Imagine you own an online store and are offering a “Buy One, Get One Free” deal for first-time shoppers. A user places an order using their real name and gets the free item. They then create multiple fake accounts with new emails and phone numbers to claim the offer again and again. While regular customers enjoy the deal once, this person claims it ten times, taking unfair advantage of the promotion. This is called bonus abuse, and it is more common than it sounds. According to reports, PayPal closed 4.5 million accounts involved in exploiting its sign-up bonus program, which negatively affected its stock price.

What is Bonus Abuse?

In online businesses, especially in sectors like e-commerce, gaming, and fintech, bonus abuse occurs when users exploit promotional offers (like sign-up bonuses, cashback, or referral rewards) multiple times using fake accounts, bots, or manipulated identities. What’s meant to attract new users ends up being a loophole for fraudsters to repeatedly gain unfair benefits, costing companies real money and skewing their marketing data.

Why Is It More Than Just a Marketing Loophole?

At first glance, bonus abuse might seem like a harmless way some users try to game the system. But in reality, it can have serious consequences for businesses.

- Financial Impact: Every abused bonus translates to direct monetary loss. When thousands of fake users claim rewards, it drains the marketing budget meant for real customers.

- Skewed Analytics: Marketing teams rely on user data to track campaign success. If fake users inflate conversion rates, it becomes harder to measure real performance or customer behavior.

- Operational Overhead: Investigating suspicious activity, processing fake transactions, and cleaning up bad data adds unnecessary load on fraud and support teams.

- Damaged Brand Trust: Frequent abuse can erode trust, both internally and externally. Genuine users may stop engaging if they feel promotions are easily exploited or unfair.

In short, bonus abuse isn’t just a clever trick; it’s a form of fraud that undermines business growth, drains resources, and opens the door to more serious types of exploitation if left unchecked.

Sectors Most Impacted by Bonus Abuse

Bonus abuse isn’t industry-specific; it adapts wherever promotional incentives are offered. However, certain sectors are particularly vulnerable due to the nature of their customer acquisition strategies and digital infrastructure.

Let’s break down the most impacted sectors and why they’re prime targets:

iGaming / Online Gambling

These platforms frequently offer sign-up bonuses, free spins, or deposit matches to attract players. Fraudsters exploit these by creating multiple fake accounts, often with synthetic identities or stolen credentials, to claim the rewards repeatedly. This behavior skews user analytics, drains marketing spend, and may allow bad actors to launder funds or mask more serious fraud. Risk teams must constantly distinguish between legitimate high-volume players and orchestrated abuse.

Fintech / Digital Banking / Neo-banks

Referral bonuses, cashback on new account creation, and promotional interest rates can be manipulated with fraudulent accounts or identity fraud. Some even use bots to automate the abuse process.

These sectors are highly regulated. Bonus abuse here isn’t just a cost issue; it can trigger compliance red flags. Risk professionals must ensure KYC/AML controls are not bypassed through loopholes in promotion mechanisms.

Loyalty & Rewards Programs

Loyalty systems that offer points, discounts, or freebies are often linked to weak identity checks. Fraudsters register with multiple identities or exploit return policies to earn extra points without genuine purchases.

Abuse can dilute the value of rewards for real customers and lead to budget overruns. Detecting pattern-based abuse across accounts becomes a key challenge for fraud analysts.

Telecommunications (Telcos & ISPs)

Telcos often offer free data, talk time, or device discounts to new customers or referrals. Fraudsters take advantage by using burner devices or SIMs to collect multiple offers. This leads to inflated subscriber metrics, misuse of network resources, and increased churn rates. It is primarily driven by financial motives and facilitated by weak verification, ease of account creation, and technology-enabled deception such as VPNs and device spoofing.

To prevent it, providers must use a combination of robust KYC, technical controls (IP/device checks), behavioral analytics, clear terms, and ongoing monitoring to protect their systems and customers.

Bonus abuse may start small but can quickly scale into organized fraud rings if not proactively addressed. Understanding where and how abuse is most likely to occur is the first step in building effective, data-driven defenses.

Anatomy of a Bonus Abuse Attack

To effectively detect and prevent bonus abuse, it’s important to understand how these attacks are structured, from the types of bonuses targeted to the methods used by fraudsters. Below is a breakdown of the most common attack vectors risk teams should monitor.

Common types of bonuses targeted

Fraudsters typically go after bonuses that offer immediate or easily extractable value. These are often automated or scaled using fake identities, bots, or social engineering tactics.

1. Sign-Up Rewards

Sign-up rewards are incentives offered for registering a new account (e.g., ₹500 for new users). They are easy to replicate using fake accounts or stolen identities and are often exploited in bulk with bots or identity farms.

2. Referral Bonuses

Rewards are granted when a user refers someone else (e.g., ₹100 each for referrer and referee). Fraudsters create both accounts (referrer and referee) themselves, effectively paying themselves the bonus.

3. Deposit Matches / Top-Up Rewards

These bonuses match the first deposit or top-up (e.g., 100% match up to ₹2,000). Fraudsters deposit small amounts repeatedly across fake accounts to extract value, then withdraw the funds or use them for further fraud.

4. Cashback & Loyalty Point Exploits

Rewards are based on spending behavior or repeated usage. Abusers may trigger fake transactions or reverse/refund purchases while keeping loyalty points or cashback.

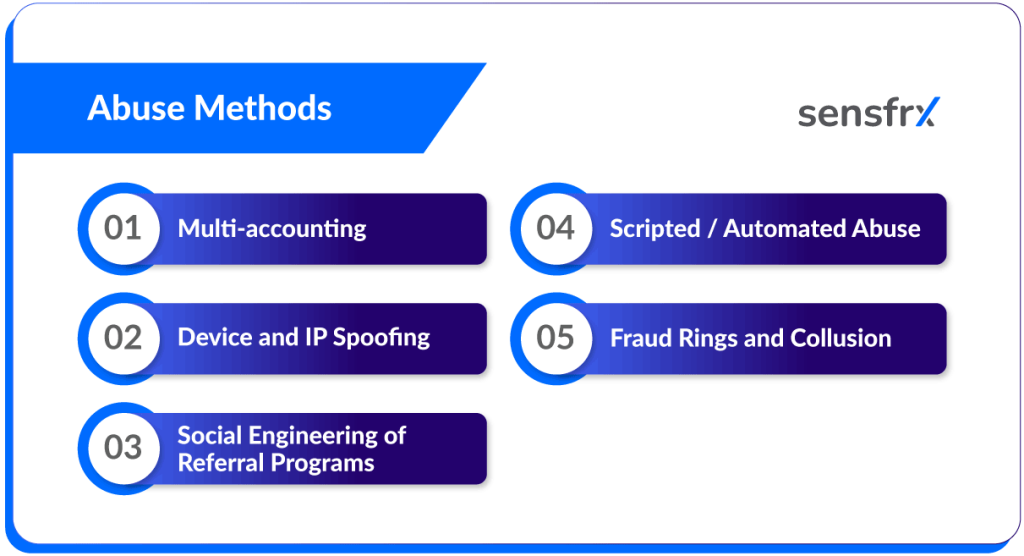

Abuse Methods

Once a target bonus is identified, fraudsters use a mix of technical and behavioral tactics to bypass detection. These are often layered to avoid raising red flags.

1. Multi-accounting

What it is:

Fraudsters create multiple fake accounts using:

- Fake identities

- Stolen personal information (from data breaches)

- Synthetic identities (fabricated details that pass basic checks)

Why it’s done:

Each new account can claim:

- Welcome bonuses

- First-deposit matches

- Free spins or credits

So, a single fraudster can harvest multiple bonuses by posing as different users.

Why it’s hard to detect:

- Fraudsters use different names, emails, and mobile numbers

- May sign up from different IPs/devices

- Without identity verification (e.g., eKYC) or behavioral analytics, they blend in with regular users

Example: A fraudster uses stolen PAN cards or fake Aadhaar details to open 20 accounts on a gaming platform. Each account gets ₹1,000 in free play credits. The fraudster plays minimally and cashes out winnings using different payment gateways, extracting ₹20,000+ in value.


2. Device and IP Spoofing

What it is:

Fraudsters hide their real device or location by:

- Using VPNs or proxies to rotate IP addresses

- Changing device fingerprints with emulators or spoofing tools

- Clearing cookies or using incognito browsers

Why it’s done:

To mask their identity and appear as a new, unique user each time, which helps them bypass checks that prevent multiple accounts from a single source.

Why it’s hard to detect:

- IPs can look like they come from different cities/countries

- Device-level fraud prevention tools may not be in place

- Hard to link accounts that technically look different

Example: A fraud ring uses mobile device emulators and proxy servers to make each account appear as a different person from different geographies. The system sees “new” users and keeps rewarding them, unaware it’s all the same group.

3. Social Engineering of Referral Programs

What it is:

Fraudsters convince real users to join shady referral schemes, often through:

- WhatsApp or Telegram groups

- Promises of quick money (“Refer this and get ₹500 each!”)

- Sharing referral codes in exchange for partial payouts

Why it works:

- Real people are involved, so the behavior looks genuine

- Referral chains are built with semi-legit activity, making detection tricky

- A fraudster may pay users a small amount to onboard with their code

Why it’s hard to detect:

- Users are real (not fake identities)

- Behavior falls in a gray area—not quite fraud, not quite clean

Example: A fraudster shares their referral link in a Telegram group and promises ₹200 to anyone who signs up and deposits ₹1,000. Fifty users do it. At ₹500 per successful referral, the fraudster earns ₹25,000 in referral bonuses, pays ₹10,000 to the participants, and pockets the remaining ₹15,000.

4. Scripted / Automated Abuse

What it is:

Fraudsters use bots, scripts, or automation tools to:

- Auto-fill forms and create accounts

- Automatically claim bonuses or complete required steps

- Simulate activity like logging in or playing games

Why it’s done:

- Allows scaling abuse to hundreds or thousands of accounts

- Saves time and effort—machines do the work

- Bypasses weak anti-bot systems

Why it’s hard to detect:

- Scripts can mimic human behavior

- CAPTCHA and basic bot checks may be weak or missing

- Looks like fast but “normal” user activity

Example: A bot registers 500 new accounts using a script that fills in fake details and rotates IP addresses. It clicks verification links and logs in. Each account claims a signup bonus. The whole operation is done overnight while the fraudster sleeps.

5. Fraud Rings and Collusion

Fraud rings operate as organized networks, exchanging compromised data, evasion techniques, and automation tools to systematically bypass controls and carry out large-scale abuse. Often involving collusion, these groups coordinate their actions, share resources, and work together to exploit systems, making detection and prevention significantly more complex.

Why it’s done:

- To execute large-scale abuse across platforms

- To make tracing and attribution difficult

- To ensure long-term profitability before getting caught

Why it’s hard to detect:

- Appears as a distributed activity across many accounts/devices

- Collusion may involve insiders or users helping each other

- Data is often traded in underground marketplaces, making it hard to track

Example: A collusion ring targets 5 different gambling platforms. They use the same methods (multi-accounting, spoofing, automation) but spread across 100 members, each handling a portion. The total fraud loss across platforms crosses ₹50 lakhs before patterns are discovered.

Bonus abuse isn’t random; it follows a pattern. By recognizing the types of offers typically abused and the tactics used, teams can build more resilient systems that detect and stop abuse early in the user journey.

Abuse Scenarios Across High-Risk Industries

Fraudsters tailor their tactics based on the vulnerabilities specific to each sector. Below are common abuse patterns seen across several high-risk industries:

1. Gambling & iGaming

The Gambling and iGaming industry is a prime target for bonus abuse, as platforms frequently offer generous promotions to attract and retain players. Fraudsters exploit these offers using tactics like multi-accounting, fake identities, and syndicate setups to illegitimately profit from welcome bonuses, deposit matches, and referral rewards.

- Welcome bonus multi-accounting: Fraudsters create multiple fake accounts to claim sign-up bonuses repeatedly.

- Fake KYC to cash out promotional winnings: Stolen or synthetic identities are used to bypass Know Your Customer (KYC) checks and facilitate the withdrawal of fraudulent gains.

- Syndicated fraud rings abuse tournaments or deposit promos: Coordinated groups manipulate tournaments or high-value deposit matches to funnel rewards to controlled accounts.

2. Fintech & Neobanks

- Wallet top-up bonuses drained via fake signups: Automated bots or human farms create fake users who receive top-up incentives, which are quickly withdrawn or used in laundering schemes.

3. Loyalty Programs (Retail & Travel)

- Referral loops, discount sharing, and rewards fraud: Users exploit loopholes in referral systems to self-refer or circulate discounts within networks, inflating reward balances without legitimate transactions.

4. E-commerce

- Promo stacking with affiliate/referral arbitrage: Fraudsters stack multiple promotional offers, such as discount codes, cashback deals, and referral bonuses, in a way that the company didn’t intend. By doing this, they can get a product or service for free, very cheaply, or even make money from the transaction.

Let’s say a company offers the following:

- ₹500 off with a promo code

- 10% cashback via a payment partner

- ₹300 bonus for every referral

A fraudster might:

- Refer themselves using fake accounts to earn the ₹300.

- Use the promo code on that same purchase.

- Pay using a cashback offer to get 10% back.

- Possibly even use a coupon site with their affiliate link, earning a commission on their own purchase.
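To make the arithmetic concrete, here is a minimal sketch in Python. The order values, the function name `stacked_promo_net_cost`, and the assumption that cashback is calculated on the amount paid after the promo code are all illustrative; real offers will have their own terms.

```python
def stacked_promo_net_cost(order_value, promo_discount, cashback_percent, referral_bonus):
    """Effective cost to the abuser after stacking all three offers (hypothetical model)."""
    paid = max(order_value - promo_discount, 0)   # promo code applied at checkout
    cashback = paid * cashback_percent / 100      # assumed: cashback on the amount paid
    return paid - cashback - referral_bonus       # self-referral bonus offsets the rest


# Offer values come from the example above; the order values are assumptions.
large_order = stacked_promo_net_cost(2000, 500, 10, 300)
small_order = stacked_promo_net_cost(800, 500, 10, 300)
print(large_order, small_order)
```

Under these assumptions, a ₹2,000 order ends up costing ₹1,050, and an ₹800 order leaves the abuser ₹30 ahead before any affiliate commission is counted.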

5. Telecom

- Free SIM registrations via fake identities or burner numbers: Fraudsters use fake KYC documents or disposable numbers to obtain multiple free SIMs, often for use in OTP bypass or spam campaigns.

Signals & Red Flags for Bonus Abuse

Understanding the red flags for bonus abuse helps you protect your customers and deploy a fraud prevention solution suited to your needs.

Bonus abusers are increasingly sophisticated, but so are the tools to detect them. By analyzing behavior, device characteristics, and network patterns, platforms can uncover signs that something isn’t quite right. Let’s break down some of the most common indicators of abuse:

1. Behavioral Signals

- Velocity of Account Creation: If dozens of new accounts are created from the same device or location within minutes, it’s a red flag. For example, imagine 50 new sign-ups from a single neighborhood or IP in under 10 minutes—far beyond what you’d expect from organic user activity.

- Identical User Journey Replay Patterns: Bots or fraudsters often automate account activity to quickly claim bonuses. A telltale sign is when multiple users navigate your platform in exactly the same way: logging in, clicking through identical pages, and completing tasks at the same speed. It’s as if someone hit “copy-paste” on their journey.

2. Device & Environment Signals

- Device Fingerprinting Mismatches: Legitimate users tend to access your platform from the same or similar devices. But when a single user’s device fingerprint (technical specs like OS, browser version, screen size) changes drastically between sessions, say, from a Windows laptop to an Android emulator, it may signal suspicious activity.

- Emulator or Jailbroken Device Detection: Fraudsters often use emulators (software that mimics real devices) or jailbroken phones to mask their identity. These setups bypass security controls and make it easier to manipulate apps, which is why their presence during sign-ups should raise concerns.

- Browser Spoofing or Headless Automation: Normal browsers behave in certain predictable ways. But when a browser lacks common features or is running in “headless mode” (used by bots to automate tasks without a visual interface), it’s likely a sign of abuse.

3. Identity and Network Patterns

- Reused KYC/PII Details: Fraud rings often recycle personal information—using the same date of birth, ID number, or name across different accounts, with minor changes (e.g., John Smith vs. J. Smith). These subtle variations aim to bypass basic duplicate checks.

- Disposable Emails and Phone Numbers: Temporary emails and burner phone numbers are commonly used in bonus abuse. They allow fraudsters to create multiple accounts quickly and avoid detection after the initial reward is claimed.

- Suspicious IP Clusters or Proxy Usage: When multiple accounts originate from the same IP address, or from IP ranges associated with VPNs, proxies, or anonymization services, it’s another red flag. These services are often used to conceal a user’s true location or circumvent geo-restrictions.

These signals, especially when seen in combination, suggest that people may be cheating the system to claim bonuses multiple times, rather than acting like normal users. Detecting these red flags helps companies stop bonus abusers early and avoid major losses.
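As one illustration of a behavioral signal, the sketch below groups sessions whose page sequence and rough timing are identical, which is one simple way to surface replayed journeys. The session format, the 2-second timing bucket, and the function names are assumptions made for this example, not a description of any specific product.

```python
from collections import defaultdict


def journey_signature(events, bucket_seconds=2):
    """Reduce a session to (page, coarse dwell-time bucket) pairs."""
    return tuple((page, int(dwell // bucket_seconds)) for page, dwell in events)


def find_replayed_journeys(sessions, min_accounts=3):
    """Return groups of accounts whose sessions share the same signature."""
    groups = defaultdict(set)
    for account_id, events in sessions.items():
        groups[journey_signature(events)].add(account_id)
    return sorted(sorted(g) for g in groups.values() if len(g) >= min_accounts)


# Hypothetical sessions: (page, seconds spent on that page)
sessions = {
    "acct_1": [("login", 1.1), ("promo", 2.4), ("claim", 0.9)],
    "acct_2": [("login", 1.3), ("promo", 2.2), ("claim", 0.8)],
    "acct_3": [("login", 1.0), ("promo", 2.9), ("claim", 0.7)],
    "acct_4": [("login", 6.5), ("home", 14.0), ("promo", 31.2)],
}
replayed = find_replayed_journeys(sessions)
print(replayed)
```

Exact matching like this is easy for a determined attacker to evade with random delays, so in practice it would be one input among several rather than a verdict on its own.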

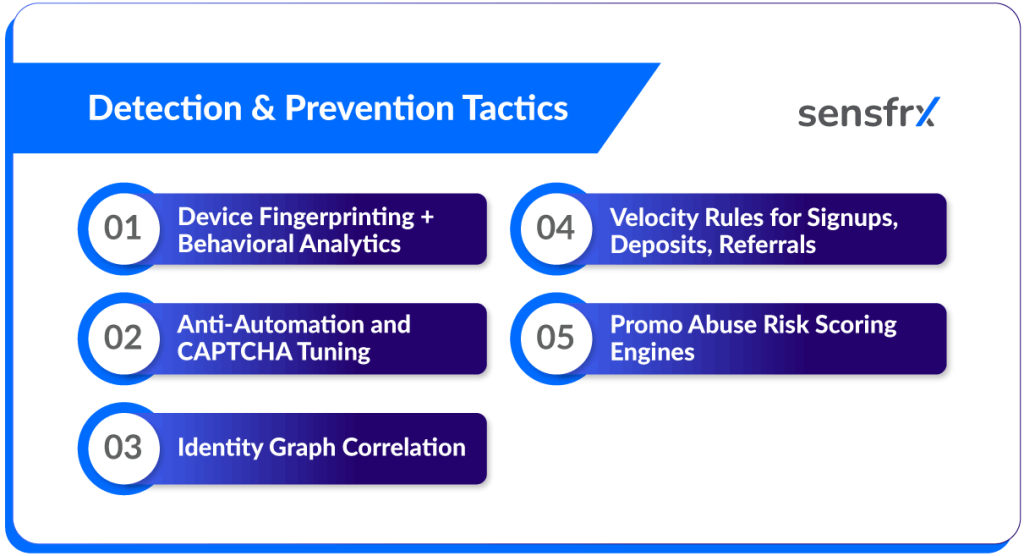

Detection & Prevention Tactics

As bonus abuse becomes more sophisticated, businesses must adopt a multi-layered approach to detect and prevent fraudulent behavior. This section outlines effective strategies, tools, and frameworks that can help teams identify abuse patterns early, reduce financial loss, and protect the integrity of promotional campaigns.

1. Device Fingerprinting + Behavioral Analytics

Device fingerprinting collects granular technical data (e.g., OS, screen size, browser, fonts, time zone) to uniquely identify devices. Combined with behavioral analytics (like typing speed, mouse movement, and click patterns), it helps distinguish real users from bots or repeat abusers.

How to Implement:

- Integrate a device intelligence solution (e.g., Sensfrx) to track device fingerprints across sessions.

- Correlate this with behavioral analytics tools that analyze session patterns in real-time.

- Set alerts for anomalies such as high device churn, multiple accounts from the same fingerprint, or robotic browsing behavior.
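The “multiple accounts from the same fingerprint” alert can be sketched as follows. This is a deliberately simplified model: it hashes a handful of attributes into an exact-match identifier, whereas commercial device intelligence tools use many more signals and tolerate small changes. The attribute names and the `accounts_sharing_devices` helper are hypothetical.

```python
import hashlib
from collections import defaultdict


def fingerprint(attrs):
    """Hash a dict of device attributes into a stable identifier."""
    canonical = "|".join(f"{key}={attrs[key]}" for key in sorted(attrs))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def accounts_sharing_devices(signups, max_accounts_per_device=1):
    """Map each over-used fingerprint to the accounts that signed up with it."""
    by_device = defaultdict(set)
    for account_id, attrs in signups:
        by_device[fingerprint(attrs)].add(account_id)
    return {
        fp: sorted(accounts)
        for fp, accounts in by_device.items()
        if len(accounts) > max_accounts_per_device
    }


# Hypothetical sign-up records: (account id, device attributes)
signups = [
    ("u1", {"os": "Android 13", "screen": "1080x2400", "tz": "Asia/Kolkata"}),
    ("u2", {"tz": "Asia/Kolkata", "screen": "1080x2400", "os": "Android 13"}),
    ("u3", {"os": "Windows 11", "screen": "1920x1080", "tz": "Asia/Kolkata"}),
]
shared = accounts_sharing_devices(signups)
print(list(shared.values()))
```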

2. Anti-Automation and CAPTCHA Tuning

CAPTCHAs and bot mitigation tools help stop scripted attacks and headless browser automation used in mass bonus abuse.

How to Implement:

- Use risk-based CAPTCHA (e.g., Google reCAPTCHA v3, which returns a risk score for each request, or hCaptcha) so that challenges or extra checks are applied according to user behavior and risk level.

- Deploy bot protection tools like Cloudflare Bot Management or PerimeterX on key workflows: signup, login, and bonus claim.

- Monitor CAPTCHA success rates to avoid false positives and fine-tune thresholds to minimize user friction.

3. Identity Graph Correlation

An identity graph links accounts through shared or similar data points, such as PII (phone numbers, emails), devices, payment instruments, and IP addresses, to identify collusion or multi-accounting attempts.

How to Implement:

- Build or integrate an identity graph engine (some platforms like Ekata, Alloy, or Deduce provide this as a service).

- Create logic to flag accounts that share multiple weak signals (e.g., same DOB + device + IP) or repeat PII patterns.

- Use graph-based visualizations to investigate clusters of abuse and build custom rules.
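A minimal sketch of the linking idea, assuming account records are available as plain dictionaries: accounts that share an attribute value are merged into one cluster using a union-find structure. For brevity, this version links on any single shared value; a production rule would more likely require several weak signals to match, as described above. All field names are hypothetical.

```python
from collections import defaultdict


def cluster_accounts(accounts):
    """Group accounts that are connected through shared attribute values."""
    parent = {account: account for account in accounts}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps lookups short
            x = parent[x]
        return x

    def union(a, b):
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    first_seen = {}  # (attribute, value) -> first account that used it
    for account, attrs in accounts.items():
        for key, value in attrs.items():
            if value is None:
                continue
            node = (key, value)
            if node in first_seen:
                union(first_seen[node], account)
            else:
                first_seen[node] = account

    clusters = defaultdict(list)
    for account in accounts:
        clusters[find(account)].append(account)
    return sorted(sorted(c) for c in clusters.values() if len(c) > 1)


# Hypothetical records: a1 and a2 share a device, a2 and a3 share a phone.
accounts = {
    "a1": {"phone": "p1", "device": "d1"},
    "a2": {"phone": "p2", "device": "d1"},
    "a3": {"phone": "p2", "device": "d3"},
    "a4": {"phone": "p9", "device": "d9"},
}
clusters = cluster_accounts(accounts)
print(clusters)
```

Note that a1 and a3 share nothing directly but still land in the same cluster through a2, which is the kind of indirect link a flat duplicate check would miss.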

4. Velocity Rules for Signups, Deposits, Referrals

Velocity checks monitor the speed and frequency of specific actions (e.g., how many signups happen from a location per hour) to detect bursts of suspicious activity.

How to Implement:

- Set baseline thresholds using historical traffic data: e.g., “max 5 signups per IP per hour” or “max 2 referrals from the same phone in 24 hours.”

- Implement rules within your fraud engine or rule-based system to auto-flag or block excessive actions.

- Continuously tune thresholds based on seasonal trends or campaign periods to reduce false positives.
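A rule such as “max 5 signups per IP per hour” can be sketched with a sliding window. The `VelocityRule` class below is an illustrative in-memory version that assumes timestamps arrive in non-decreasing order; a real deployment would typically keep these counters in a shared store so that all application servers see the same state.

```python
from collections import defaultdict, deque


class VelocityRule:
    """Allow at most max_events per key within a sliding time window."""

    def __init__(self, max_events, window_seconds):
        self.max_events = max_events
        self.window_seconds = window_seconds
        self._events = defaultdict(deque)

    def allow(self, key, timestamp):
        """Record the event and return True, or return False if over the limit."""
        window = self._events[key]
        # Drop events that have aged out of the window.
        while window and timestamp - window[0] >= self.window_seconds:
            window.popleft()
        if len(window) >= self.max_events:
            return False  # rejected events are not recorded
        window.append(timestamp)
        return True


# "Max 5 signups per IP per hour", using a documentation-range IP address.
rule = VelocityRule(max_events=5, window_seconds=3600)
results = [rule.allow("203.0.113.7", t) for t in (0, 60, 120, 180, 240, 300)]
after_an_hour = rule.allow("203.0.113.7", 3600)
print(results, after_an_hour)
```

The same class covers the referral example by keying on a phone number with a 24-hour window, for instance `VelocityRule(max_events=2, window_seconds=86400)`.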

5. Promo Abuse Risk Scoring Engines

A risk scoring engine assigns a score to each user or session based on signals (device, behavior, identity, velocity, IP reputation). High-risk scores trigger manual review or auto-blocks.

How to Implement:

- Build a scoring model internally or integrate third-party solutions (e.g., Kount, Riskified, or custom models via Snowflake + ML tools).

- Use weighted factors (e.g., burner email = +15, reused device = +20) to determine thresholds for intervention.

- Continuously retrain and validate the model with feedback from fraud investigations to improve accuracy.
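The weighted-factor approach can be sketched in a few lines. The weights for burner email and reused device come from the example above; the remaining weights, the signal names, and the review/block thresholds are assumptions chosen only to make the sketch runnable.

```python
# Signal weights: the first two are from the text, the rest are hypothetical.
WEIGHTS = {
    "burner_email": 15,
    "reused_device": 20,
    "proxy_ip": 10,
    "velocity_exceeded": 25,
    "emulator": 30,
}


def risk_score(signals):
    """Sum the weights of every signal that fired for this user or session."""
    return sum(weight for name, weight in WEIGHTS.items() if signals.get(name))


def decide(score, review_at=30, block_at=60):
    """Map a score to an action; thresholds are illustrative."""
    if score >= block_at:
        return "block"
    if score >= review_at:
        return "review"
    return "allow"


score = risk_score({"burner_email": True, "reused_device": True})
print(score, decide(score))
```

A hand-tuned table like this is a reasonable starting point; the retraining step described above would replace or adjust these weights as investigation outcomes accumulate.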

6. Adaptive Bonus Eligibility Logic

Rather than giving out bonuses blindly, use conditional logic to adjust bonus eligibility based on user trust level, activity history, or risk score.

How to Implement:

- Create a tiered eligibility system:

  - Tier 1 (low risk): Full bonus unlocked immediately

  - Tier 2 (medium risk): Partial bonus with usage conditions (e.g., verified deposit or KYC)

  - Tier 3 (high risk): No bonus or delayed bonus until manual verification

- Integrate this logic into your backend promotions engine, pulling real-time risk signals to trigger bonus eligibility decisions.

- Run A/B tests to ensure user engagement is not heavily impacted for genuine users.
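The three tiers could be expressed roughly like this. The score thresholds, the 50% partial payout, and the `BonusDecision` structure are assumptions for illustration; the tiers themselves follow the list above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BonusDecision:
    tier: int
    immediate_amount: int  # credited right away
    held_amount: int       # released only once the condition is met
    condition: str


def bonus_eligibility(score, bonus_amount, medium_at=30, high_at=60, partial_percent=50):
    """Decide how much of a bonus to release based on a risk score."""
    if score >= high_at:
        # Tier 3: nothing is released until manual verification.
        return BonusDecision(3, 0, bonus_amount, "manual verification")
    if score >= medium_at:
        # Tier 2: partial bonus now, remainder after a usage condition.
        immediate = bonus_amount * partial_percent // 100
        return BonusDecision(2, immediate, bonus_amount - immediate, "verified deposit or KYC")
    # Tier 1: full bonus unlocked immediately.
    return BonusDecision(1, bonus_amount, 0, "none")


low = bonus_eligibility(10, 500)
medium = bonus_eligibility(35, 500)
high = bonus_eligibility(80, 500)
print(low, medium, high, sep="\n")
```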

Operational Strategies for Risk Teams

Beyond detection tools and risk engines, operational strategy plays a crucial role in mitigating bonus abuse. Risk teams that collaborate with product, engineering, and customer support can create robust systems that reduce abuse while preserving a good user experience.

1. Aligning Product & Fraud Ops to Validate Bonus Logic

What it means: Many bonus abuse issues arise because promotional rules are too broad or poorly enforced in code. If product and fraud teams aren’t aligned during promo design, loopholes can easily be exploited.

How to implement:

- Embed a fraud review in the bonus campaign approval process. Risk ops should have a checklist to validate logic like:

  - Are bonus conditions clearly defined (e.g., one per user/device/IP)?

  - Can the system detect repeat claims across variants (like email + phone mismatches)?

  - Are thresholds for suspicious activity in place (e.g., max claims per day per location)?

- Run pre-launch simulations using test accounts to probe potential abuse paths.

- Ensure that engineering teams implement bonus limits at the backend, not just on the frontend (which can be bypassed).
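Here is one way the “one per user/device/IP” condition might be enforced on the server side. The `BonusLedger` class is a hypothetical in-memory stand-in for what would normally be a database table with unique constraints; the point is that the check happens where the client cannot skip it.

```python
class BonusLedger:
    """Server-side record of which users, devices, and IPs have claimed a bonus."""

    def __init__(self):
        self._claimed = set()

    def try_claim(self, campaign, user_id, device_id, ip):
        """Grant the bonus only if none of the identifiers has claimed it before."""
        keys = {
            (campaign, "user", user_id),
            (campaign, "device", device_id),
            (campaign, "ip", ip),
        }
        if keys & self._claimed:
            return False
        self._claimed |= keys
        return True


ledger = BonusLedger()
first = ledger.try_claim("welcome", "u1", "dev_A", "203.0.113.7")
same_device = ledger.try_claim("welcome", "u2", "dev_A", "198.51.100.4")
fresh_user = ledger.try_claim("welcome", "u3", "dev_B", "192.0.2.15")
print(first, same_device, fresh_user)
```

A strict per-IP limit can block legitimate users behind shared networks, so teams may prefer to treat an IP match as a review trigger rather than an automatic denial.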

2. Manual Review Workflows for Flagged Cases

What it means: Not all flagged accounts should be auto-banned. A manual review process helps investigate borderline cases, especially if the account shows signs of both real and suspicious behavior.

How to implement:

- Set up a case queue in your fraud management platform (e.g., Sift, Arkose, or even internal dashboards).

- Prioritize cases based on risk score, transaction value, and potential campaign impact.

- Create a standard review checklist that includes:

  - Cross-checking device and IP history

  - Looking for recycled PII

  - Reviewing referral relationships

  - Flagging unusual bonus claim patterns

- Train fraud analysts on how to make final decisions (approve, restrict, or escalate).

3. Cross-Platform Abuse Detection (Web + Mobile)

What it means: Fraudsters often switch between web and mobile apps to bypass detection systems that only focus on one platform. Ensuring cross-platform visibility is key to identifying multi-vector abuse.

How to implement:

- Use a centralized fraud detection system that aggregates data from both platforms—device IDs, session history, sign-up flow, etc.

- Normalize user identifiers across platforms (e.g., match mobile device ID with browser fingerprint).

- Implement shared blacklist/flag lists so that an account flagged on web is automatically restricted on mobile, and vice versa.

- Ensure behavior tracking (e.g., click paths, timing) is equally implemented across platforms for parity in analysis.

4. Honeypot Bonuses to Trap Abuse Actors

What it means: A honeypot bonus is a fake or unattractive offer meant to bait fraudsters. Legitimate users usually won’t go for it, but abusers looking to exploit any promo will.

How to implement:

- Create a low-value or hidden bonus that only appears to users who meet suspicious patterns (e.g., disposable email, emulator use).

- Monitor uptake. If someone tries to redeem it, automatically flag them for review or escalation.

- Tag devices/accounts that interact with the honeypot for future risk scoring.

- Important: Do not mislead genuine users. Honeypots should be selectively triggered, not broadly advertised.
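The selective-trigger idea can be sketched as two small pieces: a check that decides whether a session should even see the honeypot, and a tracker that tags whoever redeems it. The signal names and the `HoneypotTracker` class are hypothetical, and the in-memory dictionary stands in for whatever store feeds your risk scoring.

```python
# Signals that make a session eligible to see the honeypot (from the examples above).
SUSPICIOUS_SIGNALS = ("disposable_email", "emulator")


def should_show_honeypot(signals):
    """Show the honeypot only to sessions that already look suspicious."""
    return any(signals.get(name) for name in SUSPICIOUS_SIGNALS)


class HoneypotTracker:
    """Remember accounts and devices that tried to redeem the honeypot."""

    def __init__(self):
        self.flagged = {}  # account_id -> device_id

    def record_redemption(self, account_id, device_id):
        self.flagged[account_id] = device_id

    def is_flagged_device(self, device_id):
        return device_id in self.flagged.values()


tracker = HoneypotTracker()
if should_show_honeypot({"disposable_email": True}):
    tracker.record_redemption("acct_9", "dev_A")  # the session took the bait
print(tracker.is_flagged_device("dev_A"), should_show_honeypot({}))
```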

5. Using AI to Spot Coordinated Referral Ring Behavior

What it means: Referral abuse often involves coordinated rings: groups of accounts referring each other in loops to repeatedly claim bonuses. AI/ML models can identify these non-obvious relationships.

How to implement:

- Use clustering algorithms or graph-based models (e.g., using tools like Neo4j or Snowflake + ML pipelines) to map account connections based on:

  - Shared devices, IPs, or KYC data

  - Referral code reuse patterns

  - Timing of sign-ups and logins

- Train models to flag “unnatural” referral chains, like 10 accounts signing up within an hour using the same referral code from a known bad actor.

- Feed these outputs into your case management system for analyst review or automatic blocking.
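Before investing in a trained model, a rule-based baseline can already surface the two patterns mentioned above: referral loops and sign-up bursts on a single code. The sketch below is that baseline, not an ML model. It assumes each account has at most one referrer, and the data shapes and function names are hypothetical.

```python
from collections import defaultdict


def find_referral_loops(referred_by):
    """Find cycles in a mapping of account -> referrer."""
    loops = set()
    for start in referred_by:
        path, position, node = [], {}, start
        while node in referred_by and node not in position:
            position[node] = len(path)
            path.append(node)
            node = referred_by[node]
        if node in position:  # walked back onto the current path: a loop
            cycle = path[position[node]:]
            pivot = cycle.index(min(cycle))  # rotate so each loop is reported once
            loops.add(tuple(cycle[pivot:] + cycle[:pivot]))
    return sorted(loops)


def referral_bursts(signups, max_signups=10, window_seconds=3600):
    """Flag referral codes used max_signups times or more within the window."""
    by_code = defaultdict(list)
    for code, timestamp in signups:
        by_code[code].append(timestamp)
    flagged = []
    for code, times in by_code.items():
        times.sort()
        left = 0
        for right, current in enumerate(times):
            while current - times[left] > window_seconds:
                left += 1
            if right - left + 1 >= max_signups:
                flagged.append(code)
                break
    return sorted(flagged)


referred_by = {"a": "b", "b": "c", "c": "a", "d": "a", "e": "f"}
signups = [("CODE_X", t * 300) for t in range(10)] + [("CODE_Y", t) for t in (0, 50, 90)]
loops = find_referral_loops(referred_by)
bursts = referral_bursts(signups)
print(loops, bursts)
```

Outputs like these are natural features for a later clustering or graph model, and they give analysts something concrete to review in the meantime.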

Operational strategies ensure that fraud detection isn’t just a technical afterthought—it becomes part of the product’s DNA. When risk teams align with product, engineering, and data science, they can not only stop abuse more effectively but also design smarter, safer user incentives from the start.

Tooling Ecosystem: What Tools Help You Detect Bonus Abuse?

Technology plays a big role in detecting and stopping bonus abuse. But, with so many tools out there, it can be overwhelming to know what does what. Here’s a breakdown of the most useful categories of tools and how they fit into your fraud prevention strategy.

1. Digital Footprinting

Digital footprinting tools collect and analyze details about a user’s device and behavior, like the phone or computer they’re using, their browser type, location, and how they interact with your site. This helps tell whether the person is real or part of a fraud ring. Let’s say someone signs up 10 times using different email addresses. If all 10 accounts come from the same device or behave the same way, digital footprinting tools can catch that, even if the usernames and emails look different.

How to use it:

- Plug the tool into your sign-up and login process.

- Track things like IP address, device fingerprint, screen size, and language settings.

- Use the data to flag suspicious users (like reused devices or bots pretending to be real users).

2. Referral Program Abuse Detection Vendors

These tools are built specifically to detect when people are gaming your referral programs, like referring themselves using fake accounts or creating fake “friends” to claim rewards.

Referral abuse is tricky to spot with basic tools. These vendors use smarter methods—like checking device history, IP overlaps, and timing patterns—to catch bad actors.

How to use it:

- Choose a vendor that fits your system (e.g., Tremendous, Aampe, or specialized fraud solutions with referral abuse features).

- Set rules like “only one reward per device” or “no reward if KYC fails.”

- Monitor for clusters of users referring each other in circles or sharing common contact info.

3. In-House Rules Engines vs. Third-Party Intelligence Feeds

You can either build your own fraud rules system (in-house) or use external tools that give you updated fraud data (third-party feeds).

In-house rules engine:

- You define the rules: e.g., block sign-ups after 5 attempts from the same IP.

- Great for specific use cases and fast updates, but it requires ongoing maintenance.

Third-party feeds:

- These provide real-time fraud signals, like known bad IPs, burner emails, or stolen IDs.

- Easier to use but less customizable.

How to choose:

- Use in-house rules for tailored logic, like promo eligibility or behavioral limits.

- Use third-party feeds to quickly block known threats from the wider web.

- Best case: combine both for a flexible and well-informed fraud defense.
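Combining the two approaches might look like the sketch below, where a sign-up event is checked against in-house rules and against blocklists taken from a third-party feed. The rule name, the event fields, and the blocklist contents are placeholders; an actual feed would be loaded from the vendor’s API or files rather than hard-coded.

```python
# In-house rule from the example above: block after 5 attempts from the same IP.
IN_HOUSE_RULES = [
    ("too_many_attempts_from_ip", lambda event: event.get("attempts_from_ip", 0) > 5),
]

# Placeholder third-party data: known bad IPs and burner email domains.
FEED_BLOCKLISTS = {
    "ip": {"198.51.100.23"},
    "email_domain": {"tempmail.example"},
}


def evaluate_signup(event, rules=IN_HOUSE_RULES, blocklists=FEED_BLOCKLISTS):
    """Return the list of reasons this sign-up should be flagged (empty if clean)."""
    reasons = [name for name, predicate in rules if predicate(event)]
    for field, bad_values in blocklists.items():
        if event.get(field) in bad_values:
            reasons.append(f"feed:{field}")
    return reasons


risky = evaluate_signup(
    {"ip": "198.51.100.23", "email_domain": "gmail.com", "attempts_from_ip": 7}
)
clean = evaluate_signup(
    {"ip": "192.0.2.10", "email_domain": "gmail.com", "attempts_from_ip": 1}
)
print(risky, clean)
```

Returning reasons instead of a bare yes/no keeps the decision explainable, which helps when a flagged case lands in a manual review queue.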

4. Limitations of Relying Only on CAPTCHA or OTPs

CAPTCHAs (those “I’m not a robot” tests) and OTPs (one-time passwords sent to your phone/email) are often used to stop bots or fake accounts. But they’re not enough on their own because:

- Fraudsters can now solve CAPTCHAs using bots or cheap human labor.

- OTPs can be bypassed with fake numbers or automation tools.

- Smart fraudsters can even create thousands of accounts using virtual phone numbers.

What to do instead:

- Treat CAPTCHA and OTP as basic checks, not final barriers, since they are becoming less effective on their own.

- Always combine them with device fingerprinting, IP tracking, and behavioral analytics.

- Review suspicious patterns, such as repeated OTP requests or OTPs sent to burner numbers.

You should think of your tooling ecosystem like layers of a security system:

- Basic tools (CAPTCHA, OTP) are your front gate.

- Fingerprinting and behavior tracking are like motion sensors.

- Referral detection and intelligence feeds are your security cameras watching for coordinated attacks.

Using these tools together gives your risk team the visibility and power to stop abuse before it causes serious damage.

Conclusion

Bonus abuse remains a significant challenge in the online gambling and gaming industries, posing threats to both operators and legitimate players. Defined as the unethical exploitation of promotional offers, bonus abuse includes tactics such as multi-accounting, exploiting loopholes, bonus stacking, and using fake identities. These practices not only result in financial losses and skewed marketing data for businesses but also damage the integrity and trust within the gaming community.

To combat bonus abuse effectively, operators must implement comprehensive prevention strategies. This includes strict KYC and AML measures to verify customer identities and track suspicious activities. Advanced technologies like AI, machine learning, and behavioral analytics are essential tools for detecting and mitigating fraudulent behaviors in real-time.

The future of bonus abuse prevention will be shaped by emerging trends such as increased collaboration among gambling sites and the implementation of stricter terms and conditions. However, these measures come with challenges, including potential backlash from players and the continuous evolution of abuse tactics by fraudsters. Evolving regulations, including stricter KYC, AML requirements, and enhanced data protection laws, play a critical role in shaping the industry’s approach to preventing bonus abuse.

Sensfrx is a powerful fraud prevention platform that analyzes thousands of signals in real time to detect suspicious patterns and raise instant red flags. From spotting fake signups to identifying collusion and bonus abuse, Sensfrx helps protect your promotions from being exploited. Book a free trial to see how it can safeguard your business.

FAQs

Bonus abuse is the exploitation of promotional offers—like welcome bonuses or top-up rewards—using fake or duplicate accounts for unfair financial gain.

Fraudsters use bots, scripts, or device farms to rapidly create accounts, simulate human behavior, and trigger multiple promotions at scale.

Clusters of accounts with shared IPs or devices, unrealistic signup bursts, fake user details, and quick reward redemptions are strong indicators.

AI models can analyze patterns in user behavior, device use, and transaction timing to flag suspicious activities as they happen.

Use smart verification, behavioral analytics, velocity checks, and progressive rewards to differentiate legitimate users from fraudsters.