If you want to defeat the bots, you must first understand what it is like to be one. Bots are tireless, highly efficient and often invisible digital entities that operate in the background of the internet, executing tasks with a speed and precision that humans cannot match. Despite their advanced capabilities, bots follow a relatively simple structure. At its core, a bot is driven by a specific task, guided by clear instructions, and equipped with interaction capabilities to navigate and manipulate the online environment.

Bots come in various forms, from basic scripts that perform repetitive actions to sophisticated programs that mimic human behaviour almost perfectly. Many bots are benign, automating routine tasks such as scheduling social media posts or crawling websites to index content for search engines. However, numerous bots are designed with malicious intent, posing a significant threat to online security.

Recent studies, like those from Imperva’s 2023 Bad Bot Report, show that malicious bots account for nearly 30% of internet traffic, with sophisticated evasion techniques on the rise. This blog explores the bot landscape, detection challenges, practical strategies, and future trends in bot management.

Enter SensFRX: a next‑generation bot‑management solution that combines behavioural analytics, machine‑learning classifiers, and real‑time threat intelligence to spot both known and emerging automated threats. Unlike traditional rule‑based firewalls, SensFRX continuously learns from traffic patterns, making it especially adept at catching bots that employ advanced evasion techniques. As bots become more human‑like, detection solutions must also evolve. SensFRX’s blend of AI‑driven anomaly detection, continuous learning, and privacy‑preserving data collection exemplifies the next wave of defensive tools: ones that can adapt in real time without sacrificing user experience.

Now let’s look at the issue in depth.

The Current Bot Landscape

Bots now make up a huge slice of internet traffic. According to Distil Networks’ 2022 report, automated traffic accounted for 42 % of global web activity. Malicious bots target sectors such as e‑commerce (price‑scraping), social media (fake accounts) and gaming (credential‑stuffing).

Bad bots include scrapers that steal content, spammers that flood forums, and bots that launch distributed‑denial‑of‑service (DDoS) attacks.

Good bots – for example Google’s web crawlers or customer‑service chatbots – help make the web more efficient and accessible.

Why bots exist

- Financial profit – many bots are built for ad fraud, stealing credit‑card details or phishing.

- Data collection – some harvest email addresses and personal information to fuel spam campaigns.

- Disruption – a few are designed simply to overwhelm servers, causing outages and revenue loss.

- Competitive intelligence – bots can scrape pricing or customer reviews, giving their owners a market edge.

- Manipulation – bots may artificially boost views, likes or spread false information to sway public opinion.

As bots become more sophisticated, defending against them grows ever more critical.

Sector‑specific impacts

- E‑commerce – scalper bots hoard limited‑edition items, creating artificial scarcity; card‑testing bots try stolen card numbers at checkout, leading to chargebacks; price‑scraping bots gather product data for rivals to undercut prices. The result is not just financial loss, but also damage to reputation and customer loyalty.

- Social media – fake accounts inflate follower counts, likes and engagement, giving a false impression of popularity. These bots also spread misinformation and run coordinated spam attacks, making it hard for platforms to distinguish genuine interaction from automation.

- Online gaming – bots automate cheating, farm resources and take over accounts, undermining fair play and the overall gaming experience.

These examples show how varied and evolving bot threats are, underscoring the need for tailored defence strategies that address the specific risks each industry faces.

| Industry | Key Bot-Related Threats | Potential Impact |
| --- | --- | --- |
| E-commerce | Price scraping, inventory hoarding, scalping, card testing | Lost revenue, reputational damage, customer frustration, chargebacks |
| Social Media | Misinformation, fake engagement, spam, harassment | Eroded user trust, damaged brand reputation, distorted public discourse |
| Gaming | Cheating, resource farming, account takeover | Unfair gameplay, disrupted in-game economy, loss of player base |
| Financial Services | Credential stuffing, account takeover, payment fraud | Direct financial theft, data breaches, regulatory fines |
| Travel & Hospitality | Price scraping, booking manipulation | Revenue loss, unfair competition, customer dissatisfaction |

Challenges in Bot Detection

Bots are great at fast, precise, repetitive tasks, but they struggle with anything unpredictable – especially subtle human behaviour and complex problems. Knowing where bots shine and where they falter helps you build detection methods that target their weak points.

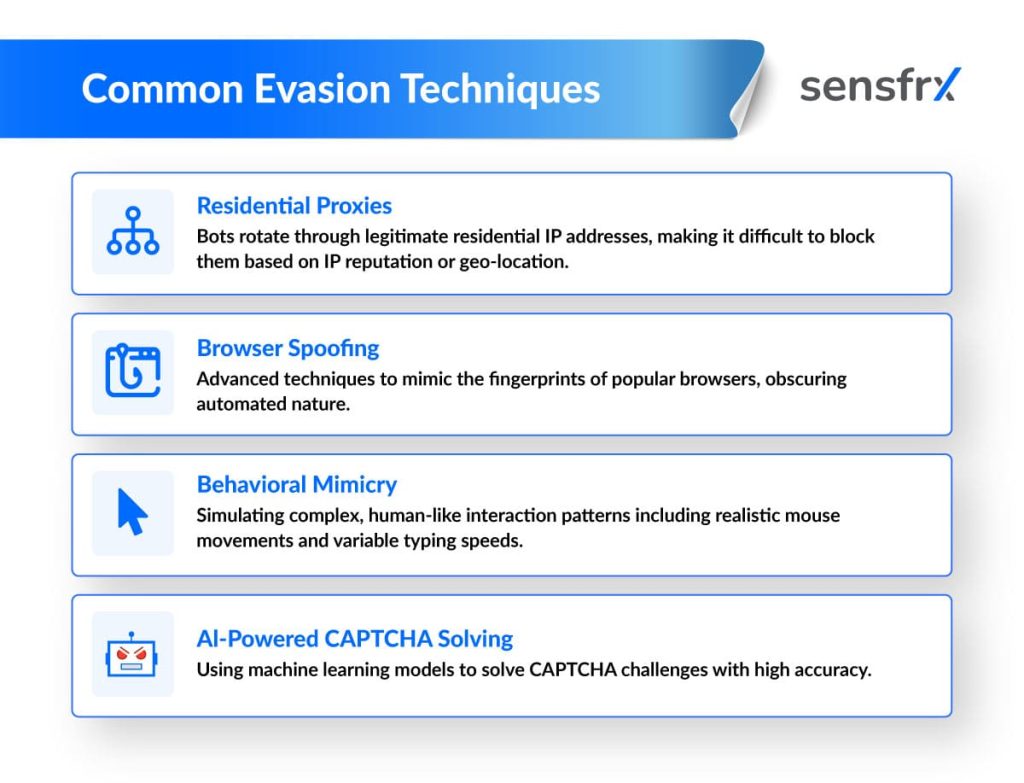

Core detection ideas

- Behavioural analysis – looks at how a user moves, clicks and scrolls. Bots often jump from page to page in a split second or follow mouse paths that don’t feel natural. Spotting these odd patterns can raise an alert.

- Honeypots – hidden elements such as invisible links or form fields that normal visitors never see. When a bot interacts with them, it triggers a log entry, making the bot easy to identify and block.

- Challenge‑response tests (CAPTCHAs) – ask the visitor to solve a task that’s simple for a person (such as recognising objects in an image) but hard for a script. Basic bots usually fail these tests, although recent AI‑driven solvers have reduced their effectiveness against more advanced bots.

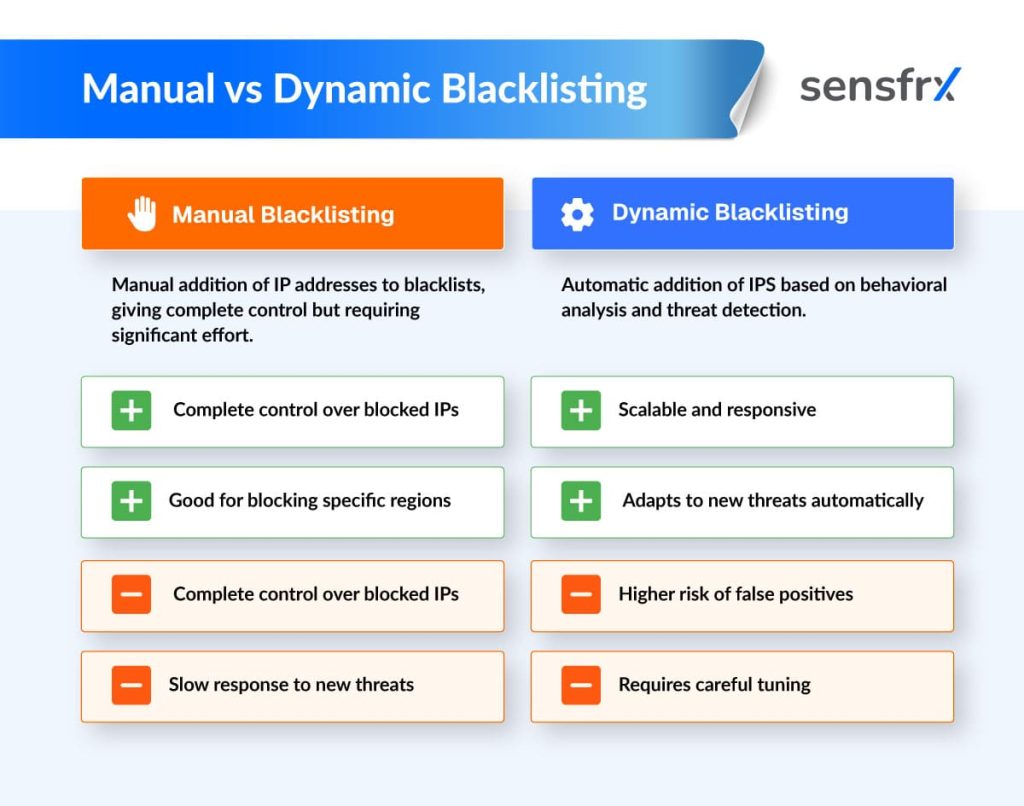

- IP‑address monitoring – checks where requests originate. A sudden burst of traffic from a single IP or from addresses known for malicious activity can be flagged and, if needed, black‑listed.
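The honeypot idea in the list above can be sketched in a few lines. This is a minimal illustration, assuming a hypothetical hidden form field named `website_url` that is concealed from human visitors with CSS; the field name and the `is_honeypot_triggered` helper are illustrative and are not taken from any particular product.

```python
# Hypothetical honeypot field: rendered in the form but hidden with CSS,
# so a human visitor should always submit it empty.
HONEYPOT_FIELD = "website_url"


def is_honeypot_triggered(form_data: dict) -> bool:
    """Return True when the hidden field was filled in, which suggests automation."""
    value = form_data.get(HONEYPOT_FIELD, "")
    return value.strip() != ""


# Example submissions (made-up data for illustration).
human_submission = {"email": "ana@example.com", "website_url": ""}
bot_submission = {"email": "x@example.com", "website_url": "http://spam.example"}
```

A triggered honeypot is usually logged and combined with other signals rather than used as the sole reason to block, since browser autofill can occasionally populate hidden fields.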

Balancing security with a smooth user experience is essential; overly aggressive checks can frustrate genuine users, while too‑lenient measures let bots slip through. By focusing on the areas where bots are most vulnerable – erratic timing, unnatural mouse movements, interaction with hidden traps, and difficulty solving human‑oriented challenges – you can create a layered defence that keeps both your site and its visitors safe.

User agent scrutiny evaluates browser and software metadata for discrepancies, since many bots fail to replicate authentic strings convincingly.
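As a rough sketch of user-agent scrutiny, the function below looks for a few discrepancies in request headers. The token list, the header-dictionary shape and the `user_agent_flags` name are assumptions made for illustration; a production check would use a maintained signature list and case-insensitive header lookup.

```python
# Illustrative substrings that appear in the default User-Agent strings of
# common automation tooling. A real deployment would maintain a larger list.
AUTOMATION_TOKENS = ("headlesschrome", "python-requests", "curl/", "phantomjs")


def user_agent_flags(headers: dict) -> list:
    """Return human-readable reasons why a request's headers look automated."""
    flags = []
    ua = headers.get("User-Agent", "").lower()
    if not ua:
        flags.append("missing user agent")
    for token in AUTOMATION_TOKENS:
        if token in ua:
            flags.append(f"automation token: {token}")
    # Mainstream browsers send Accept-Language; its absence alongside a
    # browser-like User-Agent is a discrepancy worth recording.
    if "mozilla/" in ua and "Accept-Language" not in headers:
        flags.append("browser user agent without Accept-Language")
    return flags
```

Because any header can be forged, flags like these are best treated as one input to a broader score rather than as proof.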

Studies published between 2023 and 2025, including work from Cloudflare, describe ML models trained on vast datasets that predict bot-like patterns with reported accuracy above 95% and adapt in real time to evasion tactics. Device fingerprinting, which analyses hardware, OS, and session traits, has gained traction because it stays effective when a bot hides behind VPNs or proxies. Graph neural networks (GNNs) are emerging as a way to map bot networks through their interconnected behaviours.
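To make the device-fingerprinting idea concrete, here is a minimal sketch that hashes a set of device traits into a stable identifier. The trait names are hypothetical, and real fingerprinting systems typically use many more signals and tolerate small changes; this only shows why the identifier does not depend on the visitor's IP address.

```python
import hashlib
import json


def device_fingerprint(traits: dict) -> str:
    """Hash a set of device traits into a stable hexadecimal identifier."""
    # Sorting keys makes the hash independent of the order traits arrive in.
    canonical = json.dumps(traits, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Hypothetical traits collected from a client; note that no IP address is
# included, so switching VPN or proxy does not change the result.
traits = {"os": "Windows 11", "gpu": "Intel UHD", "screen": "1920x1080"}
fingerprint = device_fingerprint(traits)
```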

Moreover, zero-trust architectures and server-side rendering challenges are recommended to counter headless browser bots. Experts advocate multi-layered systems that integrate these with traditional methods because, according to a 2024 SANS Institute report, hybrid AI bots now mimic human unpredictability. Continuous monitoring and regular updates are essential as threats evolve.

Understanding Bot Threats & Detection Techniques

When it comes to mitigating bot‑driven threats, the cornerstone is a multi‑layered architecture that stops malicious automation while preserving a seamless experience for bona fide users. A comprehensive bot‑prevention strategy combines complementary detection mechanisms, ranging from network‑level rate limiting and reputation filtering to behavioural analytics, adaptive challenge‑response, and post‑incident containment, into a cohesive, resilient defence posture.

| Layer | What It Does | Typical Tools / Techniques |
| --- | --- | --- |
| Network‑level filtering | Blocks traffic from known malicious IP ranges, bots using data‑center proxies, or abnormal request patterns. | IP reputation services, geo‑blocking, rate‑limit rules, CDN edge firewalls. |
| Behavioural analysis | Monitors how users interact with the site (mouse movement, typing cadence, navigation flow) to spot non‑human patterns. | JavaScript‑based fingerprinting, anomaly detection models, heat‑map analytics. |
| Challenge‑based verification | Presents tasks that are easy for humans but hard for automated scripts. | CAPTCHAs (image, audio, invisible), puzzle challenges, “prove you’re not a bot” JavaScript challenges. |
| Credential protection | Stops bots from brute‑forcing login or account‑creation endpoints. | Adaptive throttling, account lockout after failed attempts, password‑less login (magic links, WebAuthn). |
| Content‑level safeguards | Protects specific assets (forms, APIs) from automated abuse. | CSRF tokens, honeypot fields, signed request timestamps, API keys with usage quotas. |
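Of the content-level safeguards in the table, signed request timestamps are perhaps the least self-explanatory, so here is a minimal sketch using Python's standard `hmac` module. The secret key, the five-minute freshness window and the `path|timestamp` message layout are assumptions chosen for illustration, not a prescribed scheme.

```python
import hashlib
import hmac
import time
from typing import Optional

SECRET_KEY = b"replace-with-a-real-secret"  # hypothetical shared secret
MAX_AGE_SECONDS = 300  # assumed freshness window of five minutes


def sign_request(path: str, timestamp: int) -> str:
    """Produce an HMAC-SHA256 signature binding the path to a timestamp."""
    message = f"{path}|{timestamp}".encode("utf-8")
    return hmac.new(SECRET_KEY, message, hashlib.sha256).hexdigest()


def verify_request(path: str, timestamp: int, signature: str,
                   now: Optional[int] = None) -> bool:
    """Reject requests that are stale or whose signature does not match."""
    now = int(time.time()) if now is None else now
    if abs(now - timestamp) > MAX_AGE_SECONDS:
        return False  # too old (or too far in the future) to be trusted
    expected = sign_request(path, timestamp)
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, signature)
```

The timestamp limits how long a captured request can be replayed, while the signature prevents a script from simply rewriting the timestamp.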

1. Perimeter Hardening

At the network edge, static defences such as rate limiting (for example, 100 requests per minute per IP) and IP reputation services filter out known malicious sources. While coarse, these measures lower the volume of traffic that reaches deeper layers, conserving computational resources for more sophisticated analysis.
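The per-IP rate limit described above could be implemented as a sliding window. The sketch below is one simple in-memory variant, with the 100-requests-per-minute figure from the text as its default; the class name is illustrative, and a production limiter would normally live at the CDN or in a shared store rather than in process memory.

```python
from collections import defaultdict, deque


class SlidingWindowRateLimiter:
    """Allow at most `max_requests` per `window_seconds` for each IP address."""

    def __init__(self, max_requests: int = 100, window_seconds: float = 60.0) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # ip -> timestamps of accepted requests

    def allow(self, ip: str, now: float) -> bool:
        hits = self._hits[ip]
        # Drop timestamps that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # over the limit: reject without recording the hit
        hits.append(now)
        return True
```

Passing `now` explicitly keeps the limiter easy to test; in a live service it would typically come from `time.monotonic()`.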

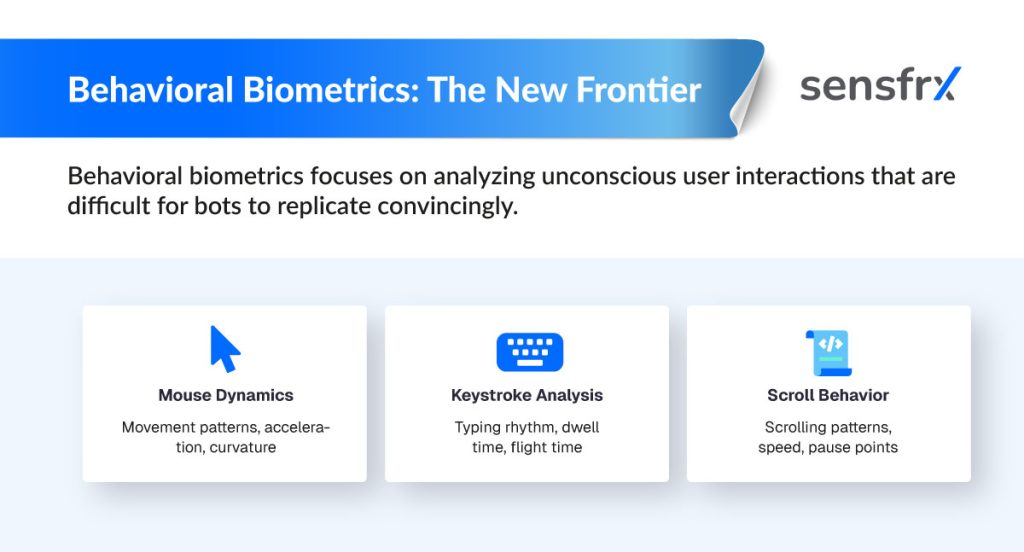

2. Behavioural Analysis

Modern bots emulate human interaction, necessitating behavioural biometrics. Features include:

- Inter‑event intervals

- Cursor trajectory curvature

- Touch‑screen pressure variance

Supervised classifiers (such as random forests or gradient‑boosted trees) trained on labelled traffic can achieve precision above 0.95 while keeping false‑positive rates below 1%. Unsupervised anomaly detection (such as isolation forests) complements supervised models by flagging novel attack patterns.
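Before any classifier can be trained, raw pointer events have to be turned into features like those listed above. The sketch below computes two of them from a list of `(timestamp, x, y)` samples. It uses a straightness ratio as a simple stand-in for trajectory curvature, which is a simplification introduced here; the event format and function names are likewise assumptions.

```python
import math
import statistics


def interval_stats(events):
    """Mean and population standard deviation of gaps between consecutive events.

    `events` is a list of (timestamp_seconds, x, y) tuples with at least two items.
    """
    gaps = [b[0] - a[0] for a, b in zip(events, events[1:])]
    return statistics.fmean(gaps), statistics.pstdev(gaps)


def path_straightness(events):
    """Straight-line distance divided by travelled distance (1.0 = perfectly straight)."""
    travelled = sum(math.dist(a[1:], b[1:]) for a, b in zip(events, events[1:]))
    direct = math.dist(events[0][1:], events[-1][1:])
    return direct / travelled if travelled else 1.0


# Made-up samples: a scripted cursor moving at a fixed cadence in a straight
# line, and a more irregular path of the kind a person might produce.
scripted = [(0.0, 0, 0), (0.1, 10, 0), (0.2, 20, 0), (0.3, 30, 0)]
irregular = [(0.0, 0, 0), (0.12, 3, 4), (0.5, 3, 10), (0.61, 9, 18)]
```

Near-zero timing variance combined with a straightness of exactly 1.0 is the kind of pattern a model can learn to treat as suspicious, though neither feature is conclusive on its own.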

3. Challenge‑Response

When a request is flagged as suspicious, a challenge is issued. CAPTCHAs remain prevalent, but newer proof‑of‑work schemes (such as Hashcash‑style puzzles) impose a verifiable computational burden. The challenge difficulty can be adaptive, scaling with the confidence score from the behavioural layer to avoid unnecessary friction for genuine users.
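A Hashcash-style puzzle with adaptive difficulty might look like the following sketch. It uses SHA-256 rather than the SHA-1 of the original Hashcash, and the linear mapping from confidence score to required zero bits, along with the 8 and 20 bit bounds, are assumptions picked only to illustrate the scaling idea.

```python
import hashlib
from itertools import count


def leading_zero_bits(digest: bytes) -> int:
    """Count the leading zero bits of a digest."""
    value = int.from_bytes(digest, "big")
    return len(digest) * 8 - value.bit_length()


def difficulty_for(bot_confidence: float, min_bits: int = 8, max_bits: int = 20) -> int:
    """Scale required zero bits linearly with the behavioural confidence (0.0 to 1.0)."""
    clamped = min(max(bot_confidence, 0.0), 1.0)
    return min_bits + round(clamped * (max_bits - min_bits))


def solve(challenge: str, bits: int) -> int:
    """Client side: brute-force a nonce whose hash has enough leading zero bits."""
    for nonce in count():
        digest = hashlib.sha256(f"{challenge}:{nonce}".encode()).digest()
        if leading_zero_bits(digest) >= bits:
            return nonce


def verify(challenge: str, nonce: int, bits: int) -> bool:
    """Server side: a single hash confirms the work was done."""
    digest = hashlib.sha256(f"{challenge}:{nonce}".encode()).digest()
    return leading_zero_bits(digest) >= bits
```

Each extra bit roughly doubles the expected work for the client while verification stays a single hash, which is what lets the server raise the cost for suspicious sessions without burdening itself.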

4. Post‑Detection Mitigation

If a bot circumvents earlier layers, runtime safeguards limit the impact:

- Rate‑based throttling on compromised accounts

- Multi‑factor authentication prompts after anomalous activity

- Automated credential rotation for high‑risk services

These controls contain the breach and provide forensic data for future model refinement.

Integrative Benefits

- Redundancy: Failure of any single layer does not collapse the entire defence.

- User Experience Preservation: Legitimate traffic experiences only the minimal necessary friction, as challenges are invoked selectively based on confidence scores.

- Continuous Learning: Behavioural models can be retrained on post‑detection data, improving detection of evolving bot tactics.

The Sensfrx Advantage: A Smarter Way to Block Bots

AI-Powered Behavioural Analysis

Sensfrx takes a smarter, more effective route to bot detection by using AI‑driven behavioural analysis instead of static rules or signatures. It builds a unique profile for every visitor by examining mouse movements, keystrokes, navigation patterns and other subtle interaction cues that bots find hard to copy.

By focusing on how a user interacts rather than just what they do, Sensfrx can spot even the most sophisticated bots that try to imitate human behaviour. Its AI‑powered Cognitive Engine continuously learns from a massive dataset of both human and bot traffic, picking up the faint patterns that separate the two.

The system adapts in real time: new and emerging bot threats are detected and blocked automatically, without the need for manual rule updates. This proactive, AI‑based approach offers a far more robust and reliable defence against bots than traditional, signature‑based methods.

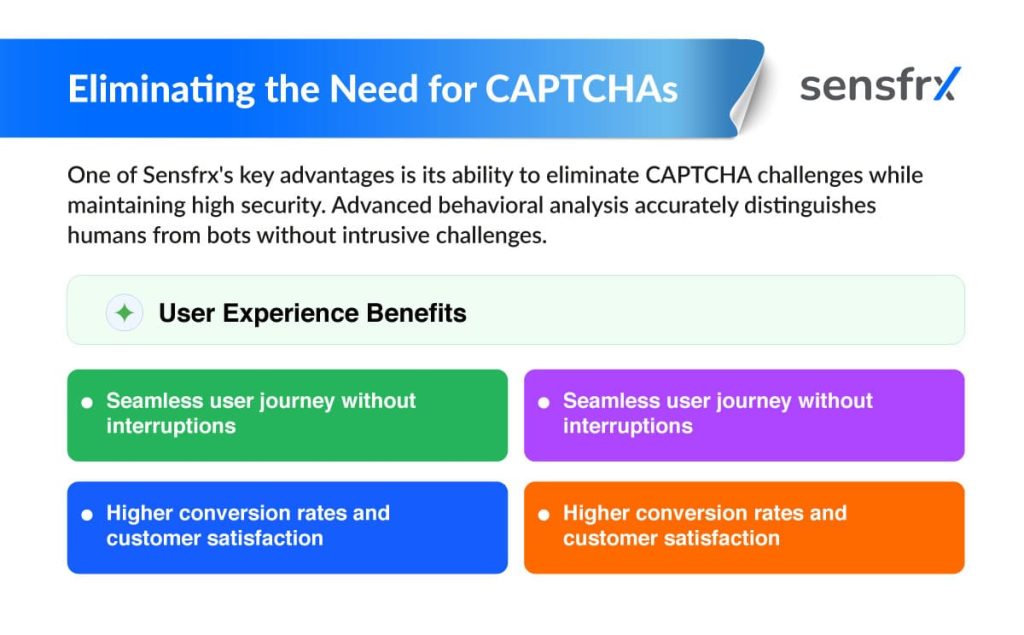

Eliminating the Need for CAPTCHAs

One of the key advantages of Sensfrx is its ability to eliminate the need for CAPTCHAs. As discussed earlier, CAPTCHAs can be a source of friction for users and are becoming less effective against modern bots. By using advanced behavioural analysis, Sensfrx can accurately distinguish between humans and bots without the need for intrusive challenges. This creates a more seamless and enjoyable user experience, which can lead to higher conversion rates and increased customer satisfaction.

Sensfrx achieves this by analysing a wide range of behavioural signals that are characteristic of humans and difficult for bots to replicate, even with the use of AI. Because the decision does not hinge on a single solvable puzzle, it is not susceptible to the bypass techniques that defeat traditional CAPTCHAs. The result is a win-win: businesses gain a higher level of security, and their customers enjoy a smoother experience.

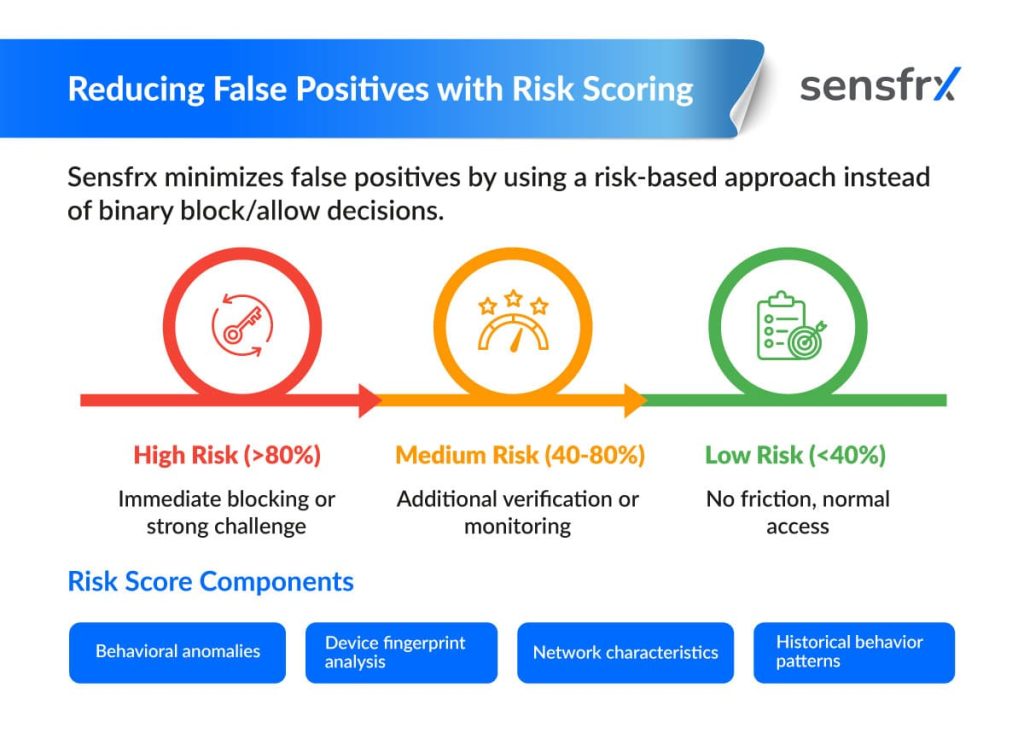

Reducing False Positives with Risk Scoring

Sensfrx aims to keep false positives to a minimum by using a risk‑based approach rather than a simple “block or allow” decision. Each visitor receives a risk score that reflects their behaviour and other signals.

Tiered response:

- High‑risk users are blocked outright.

- Medium‑risk users are presented with a challenge (such as a CAPTCHA).

- Low‑risk users pass through without any friction.

This graduated system means fewer genuine customers are mistakenly stopped, improving the overall user experience and boosting revenue.

The risk‑scoring engine is powered by machine‑learning models that are continuously refined. It evaluates a broad set of factors, including behavioural anomalies, device fingerprints and network characteristics. By merging these signals into a single score, Sensfrx can make far more nuanced and accurate decisions than traditional rule‑based solutions.
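The general pattern of merging signals into one score and mapping it to a tiered response can be sketched as follows. This is not Sensfrx's actual engine, which the text describes as machine-learning based; the weights, thresholds and signal names are hypothetical values chosen purely to illustrate the idea.

```python
# Hypothetical weights and thresholds, chosen only for illustration.
WEIGHTS = {"behaviour": 0.5, "device": 0.3, "network": 0.2}
BLOCK_THRESHOLD = 0.8
CHALLENGE_THRESHOLD = 0.5


def risk_score(signals: dict) -> float:
    """Weighted sum of per-signal anomaly scores, each expected in [0, 1]."""
    return sum(WEIGHTS[name] * signals.get(name, 0.0) for name in WEIGHTS)


def decide(score: float) -> str:
    """Map a risk score to the tiered response: block, challenge or allow."""
    if score >= BLOCK_THRESHOLD:
        return "block"
    if score >= CHALLENGE_THRESHOLD:
        return "challenge"
    return "allow"
```

Keeping the thresholds separate from the scoring makes it possible to tune how much friction users see without retraining whatever produces the underlying signals.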

The result is a high level of security that doesn’t compromise usability: businesses protect their assets while legitimate customers enjoy a smooth, uninterrupted experience.

Common Pitfalls and Best Practices

Bot management requires careful balancing to avoid common pitfalls:

- Over-Blocking: Aggressive filtering can block legitimate users. Use adaptive thresholds and tune CAPTCHAs to minimise disruption.

- Incomplete Coverage: Ensure detection systems account for mobile traffic and emerging threats like AI-driven bots.

- Outdated Defences: Regularly update blacklists, models, and threat intelligence to counter evolving tactics.

Best practices include adopting layered defences, monitoring traffic continuously, and using Sensfrx for advanced features such as progressive rate limiting and credential stuffing prevention.
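One plausible reading of progressive rate limiting is a cool-down that grows with each repeated violation. The sketch below shows that interpretation only; it is not a description of how Sensfrx implements the feature, and the doubling factor, base delay and cap are assumed values.

```python
def progressive_delay(violations: int, base_seconds: float = 1.0,
                      cap_seconds: float = 300.0) -> float:
    """Cool-down that doubles with each repeated violation, up to a cap."""
    if violations <= 0:
        return 0.0
    return min(base_seconds * 2 ** (violations - 1), cap_seconds)
```

A first-time offender waits only briefly, which limits the cost of a false positive, while a persistent script quickly reaches the cap.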

Future Trends

Bot threats are evolving rapidly, driven by advancements in AI and automation. AI‑generated bots create realistic multimedia content, evade detection with proxies, and mimic complex human behaviours. In response, self‑supervised learning and multimodal detection (combining text, behaviour, and device data) are improving accuracy, while collaborative datasets and cross‑platform solutions enable shared threat intelligence. Real‑time, device‑level tracking and anomaly‑detection systems will further enhance bot management. Staying ahead requires proactive adoption of these technologies and continuous refinement of detection strategies.

Conclusion

Effective bot management is essential for protecting digital platforms from malicious automated threats. By blending advanced detection techniques, practical blocking strategies, and continuous monitoring, organizations can safeguard their systems and users. As bots grow more sophisticated, hybrid approaches combining machine learning, threat intelligence, and adaptive defences will be critical. Stay vigilant, leverage modern tools, and adopt layered strategies to ensure ongoing protection in an ever-evolving threat landscape.

FAQs

Yes, effective bot management involves a multi-layered architecture that combines various detection and blocking strategies. These include network-level filtering (rate limiting, IP reputation services), behavioural analysis, challenge-based verification (CAPTCHAs, proof-of-work schemes), credential protection, and content-level safeguards (CSRF tokens, honeypot fields). Solutions like SensFRX use AI-driven behavioural analysis to detect and block bots without relying on static rules.

Yes, the document highlights that AI-generated bots are evolving rapidly, creating realistic multimedia content and mimicking complex human behaviours to evade detection. Blocking these sophisticated AI bots is crucial for protecting digital platforms from malicious automated threats. The document emphasizes the need for advanced, adaptive defences that can counter these evolving AI-driven tactics.

A bot detector is a system or method used to identify and distinguish automated traffic (bots) from legitimate human users on a website or digital platform. The document describes various “core detection ideas” that serve as bot detectors, including behavioural analysis, honeypots, challenge-response tests (CAPTCHAs), IP-address monitoring, user agent scrutiny, machine learning models, device fingerprinting, and graph neural networks (GNNs). SensFRX is presented as a next-generation bot-management solution that acts as a sophisticated bot detector.

Yes, blocking bots is essential and highly beneficial. The document details the significant negative impacts of malicious bots across various sectors, such as financial loss, reputational damage, customer frustration, unfair gameplay, and the spread of misinformation. By effectively blocking bots, organizations can safeguard their systems, protect user data, preserve a fair and secure online environment, and maintain customer trust and loyalty. The document concludes that “Effective bot management is essential for protecting digital platforms from malicious automated threats.”

Future trends include the use of AI-generated bots that create realistic content and evade detection. To counter this, advancements will focus on self-supervised learning, multimodal detection (combining text, behavior, and device data), collaborative datasets for shared threat intelligence, and real-time, device-level tracking and anomaly-detection systems.