The core questions have become more fundamental: Is the customer on the video call a real person or a digital puppet? Is the product review genuine or machine-generated? Is the shopfront legitimate, or a phantom enterprise built entirely by AI? This report provides a comprehensive framework for e-commerce businesses to navigate this new terrain, detailing the technology of deception, the vulnerabilities it exploits, and the multilayered defence required to protect against it.

Online product reviews strongly influence consumer purchasing decisions: approximately 95% of consumers consult them before buying. However, the integrity of these reviews is increasingly compromised. To boost sales and platform rankings, merchants commission positive reviews, orchestrate negative campaigns against competitors, or purchase fabricated endorsements. This manipulation undermines market transparency, erodes trust between consumers and sellers, and disadvantages honest merchants.

Scale of the Problem

At the forefront of this transformation is the rise of deepfakes: hyperrealistic synthetic media that convincingly impersonate real individuals in video, audio, and images. For the e-commerce sector, which is built on trust between consumers and businesses, this represents not just an evolution of fraud but a potential crisis of authenticity.

The threat is no longer theoretical or distant; it is a present, scalable, and highly effective tool for cybercrime. The technical and financial barriers to creating believable fraudulent content have collapsed, enabling malicious actors to launch sophisticated social engineering attacks at an unprecedented scale. Statistics paint a stark picture of this new reality.

Global fraud attempts are growing 21% year over year, and deepfakes are now involved in 1 of every 20 failed identity verification attempts. The financial services sector, comparable to e-commerce in its security requirements, is already a primary target, bearing 40% of all deepfake attacks. Projections indicate that e-commerce fraud losses alone could reach $107 billion annually by 2029, driven by these AI-enabled scams.

Global Estimates

- The World Economic Forum estimates that fake reviews contribute to $200 billion in inflated e-commerce sales annually, an increase of 31% from 2021.

- Fakespot’s analysis of 1.2 billion Amazon reviews flagged 39% as “unreliable”, with manipulated reviews more prevalent in the electronics and fashion categories.

- A Statista report indicates that 15–30% of online reviews on major platforms, including Amazon, eBay, and Walmart, are suspected to be fake.

Taobao/Tmall Ecosystem

- Taobao, founded in 2003 by Alibaba Group, hosts approximately 10 million active sellers and more than 900 million annual buyers. Tmall, its B2C counterpart, has a market share of 40% in China’s cross-border e-commerce.

- Despite a reported 98% positive feedback rate, China’s National Consumer Association recorded 1.8 million complaints against Taobao and Tmall in 2024, primarily related to product authenticity and review credibility.

- The “brushing” industry in China remains robust, with services charging $0.50–$2.00 per fake review or $30–$80 for comprehensive packages (“five stars + photo + 150 words”).

Mechanisms of Review Manipulation

- Review farms: Organised groups produce templated reviews, recycling text across platforms. A 2024 study found that 60% of fake reviews on Taobao used near-identical phrasing.

- Stylised language and visuals: Reviews with excessive superlatives (e.g., “life-changing product”) and staged photos increase perceived authenticity by 25%.

- Influencer seeding: Brands collaborate with Key Opinion Leaders (KOLs) on platforms such as Douyin, Xiaohongshu, and Instagram, often posting gifted content without clear disclosure, violating advertising standards.
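The templated phrasing produced by review farms is one of the easier signals to check automatically. The sketch below is a minimal illustration, not any platform's actual method: it splits each review into overlapping three-word shingles and flags pairs whose Jaccard similarity (shared shingles divided by all distinct shingles) meets a threshold. The shingle size of 3, the 0.6 threshold and all function names are assumptions chosen for the example.

```python
import re
from itertools import combinations


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Return the set of k-word shingles (contiguous word k-grams) in a review."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < k:
        # Very short reviews become a single shingle so they can still be compared.
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity |A & B| / |A | B|; defined as 0.0 for two empty sets."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def near_duplicate_pairs(reviews: list[str], k: int = 3,
                         threshold: float = 0.6) -> list[tuple[int, int, float]]:
    """Flag index pairs of reviews whose shingle overlap meets the threshold."""
    sets = [shingles(r, k) for r in reviews]
    flagged = []
    for i, j in combinations(range(len(reviews)), 2):
        score = jaccard(sets[i], sets[j])
        if score >= threshold:
            flagged.append((i, j, round(score, 2)))
    return flagged


if __name__ == "__main__":
    reviews = [
        "Amazing quality, fast delivery, totally life-changing product, five stars!",
        "Amazing quality, fast delivery, totally life-changing product, five stars!!",
        "Strap broke after two weeks and the seller ignored my messages.",
    ]
    print(near_duplicate_pairs(reviews))  # [(0, 1, 1.0)]
```

Pairwise comparison grows quadratically with the number of reviews; at platform scale, MinHash with locality-sensitive hashing approximates the same Jaccard measure far more cheaply.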

Consumer Behaviour and Welfare Effects

A natural experiment on 60,000 Taobao transactions found that fake five-star reviews boost short-term sales by 15% but increase post-purchase dissatisfaction by 22%, resulting in a 9% rise in one-star “revenge” reviews when the deception is detected. Globally, consumers misled by fake reviews spend an average of $10.50 more per order but report satisfaction scores 0.9 points lower on a 5-point Likert scale.

Regulatory and Platform Responses

- China’s 2019 E-Commerce Law imposes fines of up to $300,000 for brushing. In 2024, the State Administration for Market Regulation issued penalties totalling $5.2 million across Taobao, Jingdong, and Pinduoduo.

- Alibaba’s “zero tolerance” initiative removed 480 million suspicious reviews in 2024, using AI classifiers with a precision of 94%.

- The UK’s Digital Markets, Competition and Consumers Act (2024) allows fines up to 10% of global turnover for review fraud, with the EU’s Digital Services Act (2024) imposing similar penalties.

- Amazon’s transparency programme flags incentivised reviews, reducing their visibility by 30%.
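The 94% precision figure for Alibaba's classifiers can be made concrete. Precision is the share of flagged items that are genuinely positive, TP / (TP + FP). Assuming, as the report does not state, that the figure applies uniformly to all 480 million removed reviews, the implied split is:

```latex
\begin{aligned}
\text{precision} &= \frac{TP}{TP + FP} = 0.94, \qquad TP + FP = 480 \times 10^{6} \\
TP &= 0.94 \times 480 \times 10^{6} = 451.2 \times 10^{6} \\
FP &= (1 - 0.94) \times 480 \times 10^{6} = 28.8 \times 10^{6}
\end{aligned}
```

On that assumption, roughly 28.8 million removed reviews may have been genuine. Precision also says nothing about fake reviews the classifiers missed; that would require the recall figure, which is not reported here.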

Definition and Nature of Fake Reviews

Fake online reviews involve merchants or individuals who post misleading endorsements or defamatory comments about products or services to manipulate consumer perceptions and gain a competitive edge. These reviews, which may be entirely fabricated, unverifiable, or unrelated to the product, distort market transparency and undermine the credibility of consumer feedback.

Globally, tactics such as cash rebates (for example, $0.50–$2.00 per review) or incentives such as gift vouchers for five-star ratings are prevalent, encouraging consumers to post positive reviews regardless of their true experience. This practice, often termed “brushing” in markets such as China, artificially inflates a merchant’s reputation, drowns out negative feedback, and disrupts platforms’ opinion-mining systems, ultimately misleading consumers and skewing purchasing decisions.

Impact on Consumer Purchasing Behaviour

Consumer purchasing behaviour encompasses the stages of demand recognition, alternative evaluation, purchase decision, and post-purchase actions. Fake reviews significantly influence each stage, exploiting information asymmetry and consumer trust.

Demand Recognition

Consumers rely on reviews to assess the value of a product, often influenced by brand impressions and word of mouth. Fake reviews, laden with exaggerated praise or staged visuals, create a false sense of product quality, leading consumers to favour manipulated products over others.

For example, reviews that pair excessive superlatives with staged photos increase perceived authenticity by 25%, and templated text is widespread: 60% of fake reviews on Taobao used near-identical phrasing. This manipulation can induce artificial demand, particularly through influencer-driven “fan effects” on platforms such as Instagram or Xiaohongshu, where sponsored posts often lack proper disclosure.

Evaluation of Alternatives

When selecting products, consumers prioritise merchants with high credit scores and numerous positive reviews, which signal trustworthiness and popularity. Fake reviews exploit this by inflating the seller’s rating and review volume, misleading consumers about the quality of a product.

For example, platform recommendation algorithms often prioritise items with high review counts, amplifying the visibility of manipulated products. This creates a cycle where consumers, limited by time and information, gravitate toward seemingly reputable sellers, unaware of the underlying fraud.

Purchase Decisions

Fake reviews streamline consumer decision-making by supplying seemingly authentic information, such as detailed product descriptions or staged photos. Although this shortens decision-making time, it often leads to misguided purchases.

A Taobao study showed that fake five-star reviews increased sales by 15% but raised post-purchase dissatisfaction by 22%, with a 9% uptick in one-star “revenge” reviews when deception was uncovered. Consumers misled by fake reviews spend an average of $10.50 more per order, but report lower satisfaction.

Purchase Behaviour and Long-Term Effects

Although fake reviews may drive short-term sales, long-term success depends on product quality. The “fan effect” generated by orchestrated reviews can attract initial customers, but without genuine quality, consumer enthusiasm fades and trust erodes. Companies that rely on fake reviews risk losing market share as dissatisfied consumers share negative feedback, offsetting the initial boost.

The E-Commerce Battlefield: Mapping Key Vulnerabilities to Deepfake Scams

The rise of AI-driven deepfakes has turned the e-commerce landscape into a high-stakes battlefield where distinguishing reality from deception is increasingly difficult. Fraudsters exploit vulnerabilities throughout the customer journey, from onboarding to post-purchase support.

The Onboarding Gateway: Exploiting KYC and Video Verification

Digital customer onboarding, particularly for financial services and regulated e-commerce platforms, is a primary target for deepfake attacks. Know Your Customer (KYC) processes that rely on video-based verification are vulnerable to synthetic identities built from fake videos. Fraudsters use tools such as Deepfakes Web or Faceswapper.ai to bypass facial recognition and rudimentary liveness detection, opening fraudulent accounts for money laundering or illicit loans.

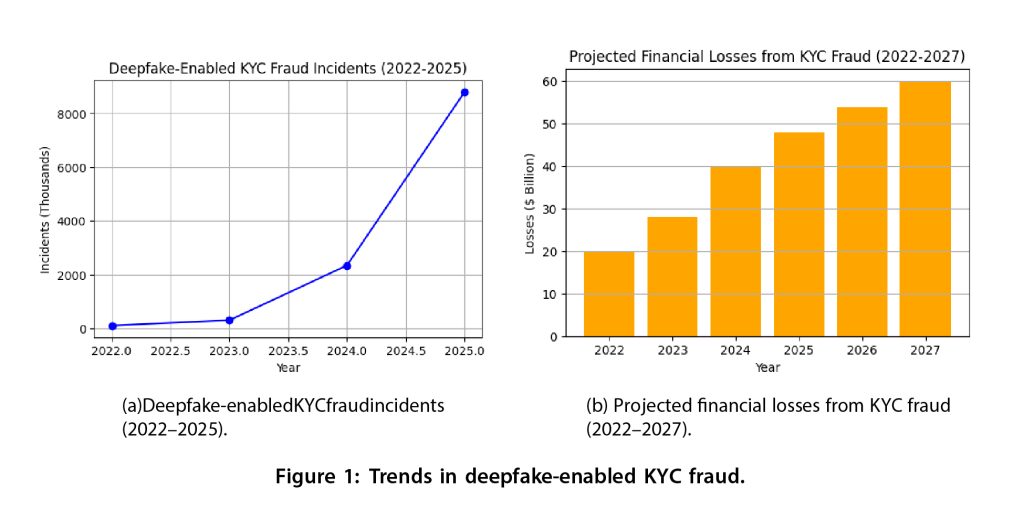

Deepfake-enabled KYC fraud costs global institutions $40 billion, a figure projected to reach $60 billion by 2027.

Real-time deepfakes, or “live fakes”, escalate the threat. Using software that alters appearance and voice during live video calls, fraudsters can respond dynamically to verification prompts, deceiving systems that rely on photo-to-selfie comparisons. One study reported that only 0.1% of people can detect deepfakes in real time, while a deepfake attempt occurs every five minutes globally.

The Human Element: Targeting Contact Centres and Customer Support

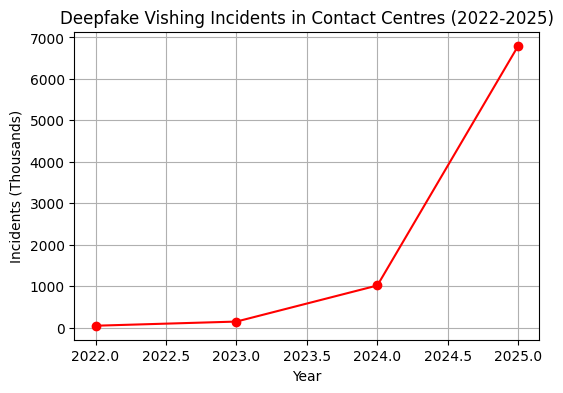

Contact centre agents face a surge in deepfake vishing (voice phishing) attacks, in which fraudsters clone customers’ voices from minimal audio samples taken from social media. These attacks facilitate fraudulent returns, account detail changes, and loyalty point theft. In 2024, deepfake activity in contact centres increased by 680% year over year, and the insurance sector saw a 475% spike in synthetic voice fraud. Approximately 1 in 127 calls to retail contact centres is fraudulent.

Fraudsters also impersonate executives to manipulate staff into actions such as password resets or fund transfers. In a notable 2024 case, a Hong Kong firm lost $25.6 million after a deepfake video call impersonating its CFO.
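Executive passcodes, listed among this report's recommendations, work because a cloned voice or face cannot supply a secret agreed in person. A minimal sketch of the verification step, assuming the passcode is stored only as a salted PBKDF2-HMAC-SHA256 hash; the function names, the iteration count and the example passcode are illustrative assumptions. It uses only Python's standard library (`hashlib.pbkdf2_hmac`, `hmac.compare_digest`, `secrets`).

```python
import hashlib
import hmac
import secrets

# Illustrative work factor; follow current guidance (e.g. OWASP) for real deployments.
ITERATIONS = 600_000


def enrol_passcode(passcode: str) -> tuple[bytes, bytes]:
    """Hash a passcode agreed in person and return (salt, digest) for storage."""
    salt = secrets.token_bytes(16)
    digest = hashlib.pbkdf2_hmac("sha256", passcode.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_passcode(candidate: str, salt: bytes, stored_digest: bytes) -> bool:
    """Re-derive the hash for a passcode given on a call and compare it safely.

    hmac.compare_digest avoids short-circuiting on the first differing byte,
    so response time does not reveal how close a guess was.
    """
    digest = hashlib.pbkdf2_hmac("sha256", candidate.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(digest, stored_digest)


def handle_urgent_request(spoken_passcode: str, salt: bytes, stored_digest: bytes) -> str:
    """Gate an urgent transfer or reset request on the passcode, not on voice or video."""
    if verify_passcode(spoken_passcode, salt, stored_digest):
        return "passcode verified"
    return "passcode rejected: do not act on the request"


if __name__ == "__main__":
    salt, digest = enrol_passcode("harbour-lantern-42")  # hypothetical passcode
    print(handle_urgent_request("harbour-lantern-42", salt, digest))  # passcode verified
    print(handle_urgent_request("wire-it-now", salt, digest))  # passcode rejected: do not act on the request
```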

The Mobile Commerce Frontline: Bypassing Biometric Authentication

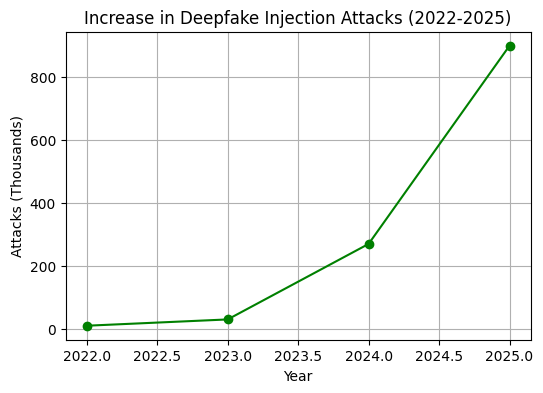

Biometric authentication, such as Face ID and voice unlock, is vulnerable to deepfake attacks. Fraudsters use AI-generated videos and cloned audio to bypass facial recognition and voice authentication. Injection attacks, which increased ninefold in 2024, bypass device sensors altogether by injecting synthetic media directly into authentication workflows, evading liveness detection. With 187.5 million mobile shoppers in the US in 2024 and mobile accounting for 50% of e-commerce sales, these vulnerabilities pose significant risks.

The Trust Economy: Quantifying the Financial and Reputational Fallout

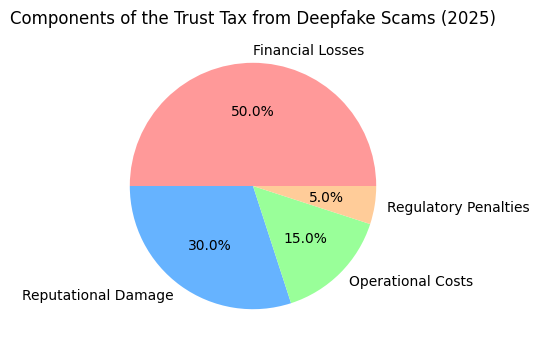

Deepfake attacks impose a “trust tax” on e-commerce, encompassing financial, reputational, and operational costs.

- Direct Financial Loss: Individual deepfake vishing incidents have caused losses exceeding $1 million, and a 2024 Hong Kong case cost $25.6 million. Mobile commerce losses reach $10,000 per hour for some firms. Total losses from deepfake fraud are expected to hit $40 billion by 2027.

- Reputational Damage: Breaches erode customer trust, leading to churn and revenue loss. A survey found 85% of finance professionals view deepfake scams as an “existential” threat to brand integrity.

- Increased Operational Costs: Businesses invest in AI-driven detection, staff training, and manual transaction reviews, adding overhead. KYC onboarding costs have increased 20% due to improved security measures.

- Regulatory and Compliance Risk: Non-compliance with KYC and AML regulations incurs penalties. In 2024, European financial firms faced $5.2 million in fines for breaches related to the dark web.

Recommendations

- Enhance KYC Systems: Adopt multimodal authentication combining biometrics, behavioural analysis, and real-time deepfake detection.

- Train Staff: Educate contact centre agents about deepfake and synthetic identities, and implement verification protocols such as executive passcodes.

- Invest in AI Detection: Use tools such as Reality Defender or Cyble for real-time deepfake detection.

- Regulatory Advocacy: Collaborate with regulators to develop clear deepfake guidelines, as current AML frameworks predate AI threats.
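The first recommendation rests on the idea that a fraudster who can fake one signal, such as a face or a voice, will find it harder to fake all of them consistently. A minimal sketch of score-level fusion, assuming each detector already outputs a risk score between 0 and 1; the three signal names, the weights and the thresholds are hypothetical placeholders that would need calibrating on real data. Any single very high score triggers rejection on its own, so a confident deepfake detector cannot be outvoted by two benign signals.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SignalScores:
    """Per-session risk scores in [0, 1]; higher means more likely fraudulent.

    The fields are hypothetical stand-ins for a face-match/liveness check,
    a behavioural-analytics model and a deepfake detector.
    """
    biometric: float
    behavioural: float
    deepfake: float

    def __post_init__(self) -> None:
        for name in ("biometric", "behavioural", "deepfake"):
            value = getattr(self, name)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{name} score must be in [0, 1], got {value}")


# Hypothetical weights and thresholds for illustration only.
WEIGHTS = {"biometric": 0.3, "behavioural": 0.3, "deepfake": 0.4}
REVIEW_THRESHOLD = 0.4
VETO_THRESHOLD = 0.9


def fused_risk(s: SignalScores) -> float:
    """Weighted average of the individual risk scores."""
    return sum(weight * getattr(s, name) for name, weight in WEIGHTS.items())


def decide(s: SignalScores) -> str:
    """Return 'reject', 'manual_review' or 'accept' for an onboarding session."""
    if max(s.biometric, s.behavioural, s.deepfake) >= VETO_THRESHOLD:
        return "reject"  # one strong signal is enough; averaging would dilute it
    if fused_risk(s) >= REVIEW_THRESHOLD:
        return "manual_review"
    return "accept"


if __name__ == "__main__":
    print(decide(SignalScores(biometric=0.1, behavioural=0.2, deepfake=0.95)))  # reject
    print(decide(SignalScores(biometric=0.5, behavioural=0.5, deepfake=0.3)))   # manual_review (fused risk 0.42)
    print(decide(SignalScores(biometric=0.05, behavioural=0.1, deepfake=0.1)))  # accept
```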

Anatomy of Deepfake Scams: A Typology of Threats and Defences in E-Commerce

Deepfake scams exploit vulnerabilities in e-commerce through a range of tactics, undermining trust and causing significant financial losses. By categorising these threats into impersonation, synthetic content, and identity fraud, businesses can develop targeted countermeasures. This section describes each attack vector, its impact, and effective defences.

1. Impersonation and Social Engineering Attacks

Impersonation scams use deepfake technology to mimic trusted individuals, exploiting human psychology to manipulate victims.

- Customer Impersonation: Fraudsters employ voice cloning or deepfake videos, often using breached data, to pose as legitimate customers. They target contact centres to process fraudulent returns, redirect refunds, alter shipping addresses, or steal loyalty points. In 2024, such scams represented 32% of contact centre fraud, costing retailers $1.5 billion worldwide.

- Executive Impersonation (CEO Fraud): Criminals use voice clones or deepfake video calls to impersonate executives, pressuring employees into urgent wire transfers. In one UK case, a company lost $30 million to a deepfake call impersonating its CFO. CEO fraud incidents increased 420% from 2023 to 2024.

- Voice Phishing (Vishing) and Family Emergency Scams: Using voice samples from social networks, fraudsters clone voices to impersonate loved ones and claim emergencies to extort funds. These scams rose 520% in 2024, particularly targeting elderly consumers.
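Table 1 lists MFA among the defences against customer impersonation: a cloned voice can reproduce how a customer sounds, but not a one-time code generated on the customer's own device. The sketch below checks a time-based one-time password (TOTP) as specified in RFC 6238, with HMAC-SHA1, 30-second steps and 6 digits, using only Python's standard library. How the shared secret is enrolled and stored, and the one-step clock-drift window, are assumptions for illustration; a production system would more likely rely on a vetted authentication library.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using dynamic truncation."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # low 4 bits of the last byte choose a 4-byte window
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10**digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based OTP (RFC 6238): HOTP over the count of elapsed time steps."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_caller_code(secret: bytes, submitted: str, at: Optional[float] = None,
                       step: int = 30, digits: int = 6, drift_steps: int = 1) -> bool:
    """Accept a code from the current step or up to drift_steps either side."""
    now = time.time() if at is None else at
    counter = int(now // step)
    for delta in range(-drift_steps, drift_steps + 1):
        if counter + delta < 0:
            continue
        expected = hotp(secret, counter + delta, digits)
        # Compare as bytes so non-ASCII input cannot raise inside compare_digest.
        if hmac.compare_digest(expected.encode(), submitted.strip().encode()):
            return True
    return False


if __name__ == "__main__":
    # Shared secret from the RFC 6238 Appendix B SHA-1 test vectors.
    secret = b"12345678901234567890"
    print(totp(secret, at=59, digits=8))                # 94287082 (RFC 6238 test vector)
    print(verify_caller_code(secret, "287082", at=59))  # True
    print(verify_caller_code(secret, "000000", at=59))  # False
```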

2. Synthetic Content and Digital Forgery

Synthetic content scams create fake digital assets to deceive consumers at the beginning of their shopping journey.

- AI-Generated Storefronts and Catalogues: Cybercriminals use generative AI to create fake e-commerce sites with photorealistic product images and compelling narratives, often featuring virtual try-on tools. These sites led to $2.8 billion in consumer losses in 2024.

- Deepfake Testimonials and AI-Generated Reviews: Fraudsters use Large Language Models (LLMs) to produce convincing reviews and deepfake video testimonials. In 2024, 42% of online reviews were considered unreliable, eroding trust and disadvantaging honest merchants. “Review-for-refund” networks amplify this deception.
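Table 1 pairs AI-generated storefronts with URL reputation and site checks. One inexpensive site check is to flag a shop domain that sits within a small edit distance of a domain the shopper already trusts, since cloned shops often register such near-miss names. A minimal sketch, assuming a hypothetical allow-list `KNOWN_SHOPS` and a maximum distance of 2 (both illustrative); it is one signal to feed into a reputation check, not a complete defence.

```python
from urllib.parse import urlsplit

# Hypothetical allow-list of domains the shopper already trusts.
KNOWN_SHOPS = ("example-shop.com", "trustedstore.co.uk")


def levenshtein(a: str, b: str) -> int:
    """Edit distance counting single-character insertions, deletions and substitutions."""
    if len(a) < len(b):
        a, b = b, a  # keep the shorter string in the inner loop
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]
        for j, cb in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,               # delete ca
                current[j - 1] + 1,            # insert cb
                previous[j - 1] + (ca != cb),  # substitute (free if equal)
            ))
        previous = current
    return previous[-1]


def classify_url(url: str, known=KNOWN_SHOPS, max_distance: int = 2) -> str:
    """Return 'known', 'lookalike of <domain>' or 'unknown' for a shop URL."""
    host = (urlsplit(url).hostname or "").lower()
    if host.startswith("www."):
        host = host[len("www."):]
    if host in known:
        return "known"
    for domain in known:
        if levenshtein(host, domain) <= max_distance:
            return f"lookalike of {domain}"
    return "unknown"


if __name__ == "__main__":
    print(classify_url("https://www.example-shop.com/product/42"))  # known
    print(classify_url("https://examp1e-shop.com/sale"))             # lookalike of example-shop.com
    print(classify_url("https://unrelated-blog.org/post"))           # unknown
```

A small threshold will also catch legitimate but similar domains, so a match is best treated as a reason for further checks rather than a verdict.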

3. Identity and Application Fraud

Identity fraud leverages deepfakes to create synthetic identities that bypass security checks.

- Synthetic Identities for New Account Fraud: Fraudsters combine stolen data with fabricated details, using deepfake videos to pass KYC biometric checks. These accounts enable money laundering or fraudulent loans, with losses projected at $18 billion by 2027. In 2024, 28% of new account fraud involved synthetic identities.

Typology of Deepfake Scams and Defences

Table 1: Typology of Deepfake Scams and Defence Mechanisms in E-Commerce

| Category | Tactic | Mechanics | Target | Impact | Defences |
|---|---|---|---|---|---|
| Impersonation | Customer impersonation | Voice cloning, deepfake video | Contact centres | Return fraud, account takeover | MFA, detection tools |
| Impersonation | Executive fraud | Voice cloning, video calls | Finance teams | Large-scale loss | Executive passcodes, training |
| Impersonation | Vishing/family emergency | Voice cloning (TTS) | Consumers, staff | Extortion | Voice anomaly detection |
| Synthetic content | AI storefronts | AI images + LLMs | Customers | Payment fraud | URL reputation, site checks |
| Synthetic content | AI reviews | LLMs, deepfake media | Customers | Trust erosion | Authenticity algorithms |
| Identity fraud | New-account fraud | Deepfake video, synthetic IDs | KYC systems | Money laundering | Multimodal biometrics |

Regulatory Measures

To combat fake reviews, regulatory frameworks have been implemented worldwide. China’s Anti-Unfair Competition Law (2017) imposes fines of $30,000–$300,000 for misleading commercial practices, with the maximum of $300,000 applying to serious violations. In 2024, China’s State Administration for Market Regulation levied $5.2 million in fines on platforms such as Taobao and Jingdong. The UK’s Digital Markets, Competition and Consumers Act (2024) allows fines of up to 10% of global turnover, while the EU’s Digital Services Act (2024) enforces similar measures. Platforms such as Amazon and Alibaba have introduced AI-driven review moderation; Alibaba removed 480 million suspicious reviews in 2024 with 94% precision.

References

- World Economic Forum, “The trillion-dollar cost of fake reviews,” WEF White Paper Series, 2023.

- Fakespot, “2024 global review analysis report,” Fakespot Research Publications, 2024.

- Statista, “E-commerce worldwide – statistics & facts,” Statista Reports, 2024.

- Alibaba Group, “Annual report FY2024,” Alibaba Group Corporate Publications, 2024.

- Marketing China, “Taobao & Tmall statistics and key trends: A look into China’s e-commerce powerhouse,” Marketing China Reports, 2023.